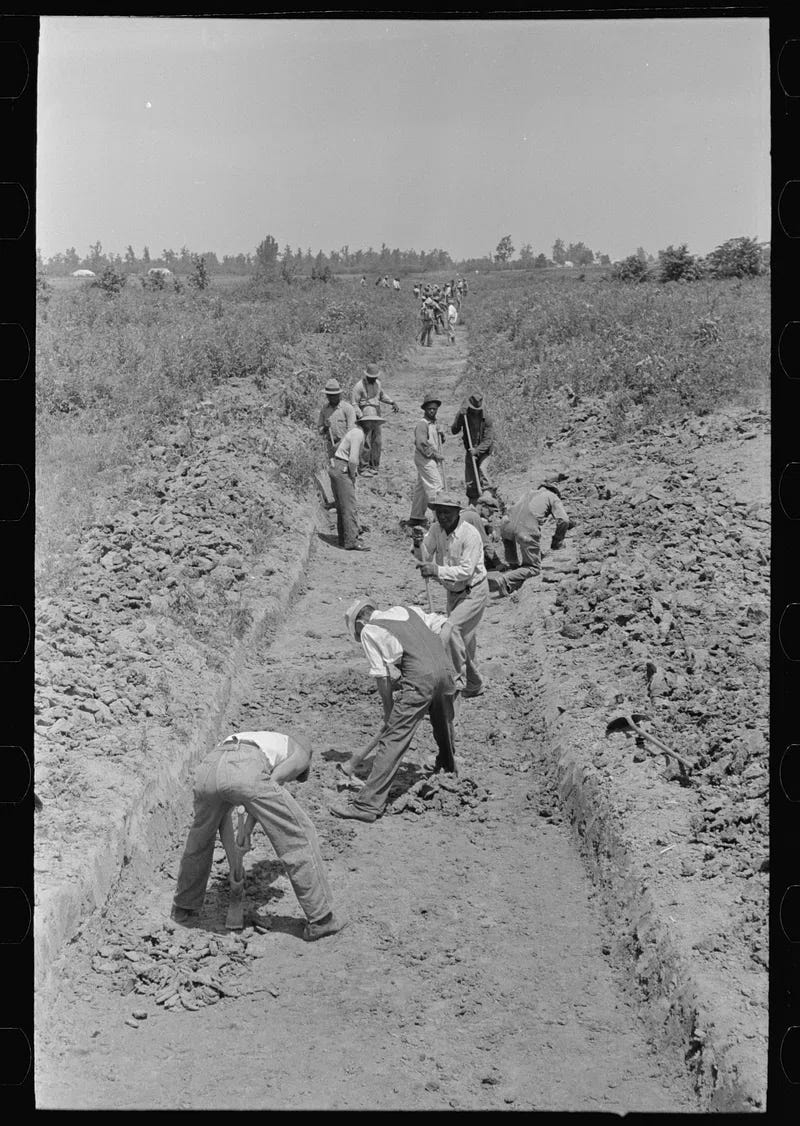

What If IT is Valued Just The Same as Ditch-Digging?

Men and women with shovels or keyboards? Doesn’t make a difference—it’s all the same for the enterprise.

TL;DR: “Value” is not well-defined in enterprises, and they want high headcounts (as in old-school manual labor) because headcount gives work the veneer of looking efficient, important, and valuable. However, since technology is a socio-technical field based on knowledge work, this model instead results in low impact and high friction, and IT ends up as a garbage-and-cost generator rather than a value generator. Ultimately, “IT” is a construct of poor strategy and an ill-defined concept of value. This is one of the foundational reasons I believe enterprises fail at digital products, or at digital value generation altogether. I propose that they may simply be wired to resist any change.

The existential terror of “value”

Given the option to purchase something on a price tag set by the “value” the thing generates for you, or by the hour, which would you choose?

Value-based pricing has been a bit of a fad, and at one of my previous jobs I had some experience trying to sell it.

Long story short: AFAIK, we never managed to sell anything using that model.

I’m not saying that it doesn’t have a place, but hey, if the customer doesn’t even have any conception of (or corporate framing for) defining or knowing value, then of course: Why would they buy it that way?

So that was a dead end, to say the least.

“Value” and “value-based” border on the existential: “What is value? Value for whom? Is it universal or is it subjective? Who gets to define it?” and so on. The questions come, yet the answers remain obscure, hidden. As a Swede, I will testify to the fact that most Swedes abhor existential questions, and that we have practically institutionalized “freedom from conflict and discomfort” while pushing academic bildung out of the national ethic. So any answers here, I assume, will stay elusive for quite some time still.

Tip: Read the link below as a starter on your journey to find your answer.

“What is value?” (itrevolution.com): We all know that the key to a winning, high-performing organization is aligning our work with delivering…

My reflection of the day concerns the predicament of developers, or rather their place in the great (often corporate) world of IT and digital. I won’t even call it tech, because I’d simply assume these problems don’t even make sense in such contexts. Note: I’m not singing any praises, just trying to draw a line in the sand.

No, my experience is within bigger organizations so that’s what I’ll rant about.

Let’s set the scene:

“IT” is what software development becomes when exposed to the radiation of enterprises, malforming it into something ontologically different from what software development is actually about.

As the title makes clear, I find that it’s true that

IT is valued just the same as ditch-digging for most enterprises.

That’s to say:

- “IT” is measured in numbers: hands, heads. If possible, they are physically present, none of that remote shit. Ergo, a small IT headcount is indicative of a weak leader. You don’t want to be a weak, simple underling, so to project force and resolve you hire every pair of hands you can find.

- “IT” simultaneously is, and is not, the digital labor force of your organization. You want them to shut up, obey, and fix the printer, but you are also a benevolent and modern ruler, so you want them to code stuff for the grown-ups in other departments too. They get no amenities or other material perks; they should already be happy with the slightly higher pay they get for providing skilled labor. Yet they are treated as unskilled staff.

- “IT” delivers stuff; others command it to do so. Being last in line, your job in the IT labor force is to be a problem-solver, not to ask questions. Leave that to the grown-ups. If ideas for a new, hyped, super-important system land on your desk as a lowly developer, in the shape of a misspelled single A4 page with less than 150 words of content, and you don’t know what the fuck to make of it, keep it to yourself. Your questions are “too technical” to answer and they stop returning your calls. Think of job security. Just guess your way to a solution. You won’t get answers anyway.

- The ditch-digging fetish is a major turn-on. The sweat on the brow, the labor, the finely lined-up servants in their cubicle rows… Squint your eyes and feel a hot waft of air from the AC and you can practically imagine the laborers toiling on each side of the new state highway. Again, the value is made manifest through the organization chart, the sheer headcount, the sound and rumble of toil, and the number of sync and alignment meetings filling up Outlook blocks. That is a far cry from how Agile, DevOps, and sensible empirical approaches in general would measure value. But again I ask: if there is no better concept of value, is this model not vastly superior to having no concept of value at all? I’ll leave answering that for you as a rhetorical exercise.

Hence, busyness becomes your business, under the whip of a command-and-control structure. And many have accepted and internalized that state.

We can control this…can’t we?

You perhaps already know that the majority of IT projects (i.e. in the enterprise context) fail. If not, see for example:

- www.gartner.com: Successful projects are characterized by less bureaucracy in governance arrangements and greater focus on outcomes.

- “Why IT projects still fail” (www.cio.com): Despite strategic alignment among IT and business leaders, technical and transformational initiatives still fall flat…

Despite the depressing facts and numbers, enterprises seem to push on, committing the same sins time after time. The result is “leading voices” on public social media (say LinkedIn) writing tons of content about how to address this with:

- Culture change

- Vertical integration (business and IT join forces)

- DevOps (more agency and responsibility)

- Agile (faster cycles)

- Attacks on non-Agile “Agile frameworks” (SAFe etc.)

- Training and onboarding (sharing a vision, goals, same-ish skills)

- Software engineering vs plain development

And a lot more, and that’s just fine. I’d even argue, on a point-by-point basis, that these areas (and others) are correctly identified as needing improvement and there’s a lot of wholesome work to be done, in order to improve them. Blessed be ye, who have the hearts of angels and infinite patience to conduct that work.

In my experience and thinking, organizations struggle with digitalization for at least 3 major reasons:

- Cost issues lead to uncontrollable overruns. You think FinOps will save you? FinOps is not enough to solve this, if you also lack agency — awareness does not necessarily lead to action, as you might know.

- Lack of competency (and lack of culture) means having to suffer from incompetent staff or a subpar on-the-job training program. You think building a culture of competency will save you? Onboarding, training, and interviewing are only damage control.

- Non-integration (of processes, culture, DevOps…) leads to fragmentation, friction, attrition, bad morale, and more bad things piling up. You think hope or providence will save you? Organizations have incredible difficulty adjusting to the speed of digital — hope is not a strategy. Even with providence, available evidence and anecdotes tell of multi-year transformations.

But I don’t believe the above areas of improvement, on their own, will cut it when it comes to fundamentally rethinking why you have a development workforce. You are fighting the battle from below, not from above (where authority exists; non-PC remark, yes, but let’s be honest for a second), nor from the “side”, where you displace or even disrupt the basic assumptions altogether.

Seriously, what is one to do?

Of course, it certainly doesn’t have to be this way. There are many organizations and businesses that set good examples for the rest.

All of the above presumes that we are able to fix the competency and culture issues and fully and vertically integrate digital and IT capabilities in an org while retaining cost control. Maybe you could! But what if there is something even better, if we step outside of the headspace we often get stuck in? So, let’s zoom out one more step.

Consider these extreme opposites:

- An organization with practically only developers.

- An organization without developers.

If your value is absolutely 1:1 correlated with software, then the first one seems kind of right. Just look at how basically any meaningful tech company started (and is still operated today). Certainly, over time and with creeping bureaucracy, even giants like Google lower their engineers-to-rest ratio. That’s “IT” happening and rotting them from the head down, like with dead fish. Given a smaller scale, though, this engineering-focused model will work. Do you make your money from tech? Good. Fire practically every non-technical person.

Maybe it’s worth recalling how software engineers are distinguished from software developers. There are lots of articles on this, but the linked one should set some basic parameters for you: Engineers have deeper and wider competency and responsibility, while developers could be any code monkey able to wrangle some programming tasks.

In an organization with a heavy, existential dependence on its technical skills, software developers (i.e. code monkeys) won’t be enough. You need to have a software engineering organization in place that caters to optimal flow and to everything else that is expected to extract maximum value from software. But I digress.

In the other example, let’s imagine there are zero developers. This would account for the vast number of organizations and businesses. This is not a “special” state—in fact, this is the norm. Unless you’ve worked in FAANG all of your life, you know this to be true because that non-FAANG company is probably the one paying your salary while you’re reading this.

In this case, it would be common to (up to a point) entrust your needs to agencies or contract workers. While I’ve found some of these relations to work out better than others, it seems to be a universal fact that organizations of this type very seldom “get” what it means to be digital. It’s almost always driven from a project perspective (finite, well-defined timeframe and requirements) and the work is never central to their existence, even though it may be important (a key distinction between these two words).

The benefits of consultants (and agencies, etc.) include lower risk and liability in terms of organizational change, culture change, and integration of digitalization in your business model. You can effectively hand that problem off to a mercenary “solving the problem or project” for you. But it won’t, and can’t, change your organization. No consultant ever did. I’d argue you are willingly paying to avoid that existential change, even if there is increased friction when conducting the work (a project, typically). This might in fact be a very smart thing. Keep doing that if you are not dependent on internalized digital competence. You probably aren’t.

Both of these extremes are better than the stalemate played by most conventional organizations with large IT staffs, where an uncanny, bizarre middle-ground is the reality: An impoverished software engineering organization, no clear value generation, no agency or product ownership, and so on. It’s the Twilight Zone of technology and no one really likes it there.

Calling bullshit

I want to spend a few minutes on a few bullshit concerns relating to our general theme here.

More staff is most likely not better

You want more staff… why on earth would you want that?

Maybe you assume you get more things done. That seems reasonable in principle. But let’s consider and ask ourselves a few questions first…

- We know that, empirically, larger groups will significantly underperform compared to smaller groups.

- The costs will balloon. Who carries the cost? Every “yes” is 99 “no’s”—what 99 things are you saying no to?

- Have you looked at the efficiency of your flow and streams of work before asking for more people? Identify and optimize first.

- Is there a linear (1:1), sublinear (e.g. 1:0.5), or superlinear (e.g. 1:3) relation to value generation with more, or fewer, people? If you don’t know, stay away from asking for more people until you can prove it makes sense to get more heads on staff. Obviously, if you are “losing out” by getting people, don’t get more heads 🤷‍♀️

- What is the competency you actually need in your context? Is it really, truly, developers/engineers, or something else? Developers can’t solve all problems.

- Where are the competency frictions? For example: Are Business Analysts technical enough to actually competently do their work (be honest now)? Do developers have the necessary architecture competency to design the overall solution? Maybe you need fewer (or the same number of people) but with other skills? Don’t assume it will be a generic do-it-all developer.

- Are there process frictions? Is the team delivering based on pre-defined requirements, with little technical early input and mandate?
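The linear/sublinear/superlinear question above can be checked with a few data points before anyone asks for more heads. This is only a minimal sketch under loud assumptions: that you have some (hypothetical) numeric measure of delivered value per period, and that the relation is roughly a power law, value ≈ a · headcount^b. The function names and the tolerance are made up for illustration. An exponent b near 1 is linear, below 1 sublinear, above 1 superlinear.

```python
import math


def scaling_exponent(observations):
    """Least-squares slope of log(value) against log(headcount).

    `observations` is a list of (headcount, value) pairs with positive
    numbers. The value measure itself is an assumption: use whatever your
    organization has actually agreed counts as value.
    """
    xs = [math.log(headcount) for headcount, _ in observations]
    ys = [math.log(value) for _, value in observations]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    variance = sum((x - mean_x) ** 2 for x in xs)
    if variance == 0:
        raise ValueError("need observations at different headcounts")
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return covariance / variance


def classify(exponent, tolerance=0.1):
    """Label the relation; the tolerance band is an arbitrary choice."""
    if exponent > 1 + tolerance:
        return "superlinear"
    if exponent < 1 - tolerance:
        return "sublinear"
    return "linear"


# Hypothetical history: quadrupling the team only doubled the output.
history = [(4, 2.0), (16, 4.0), (64, 8.0)]
print(classify(scaling_exponent(history)))
```

If you can’t even fill in the `history` list, that is the finding: you don’t know the relation, so you can’t justify the hire.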

Now for an “IT” nightmare… Let’s consider that your boss—the CXO—puts more people in the department. Even in this case, you should raise the same questions, though maybe this time you will have to accept the new reality. An opportunity to train on that stiff upper lip, old chap!

🕰️ Ding-dong! Why’s that clock ringing? That’s your fate making you aware that it’s time to look for a new job if that happens.

Software doesn’t fail because you have too few developers

People will reflexively complain that they are too few to deliver work on time. This is probably not true.

- Your developers aren’t typing too slowly. Software delivery is not contingent on typing speed; it depends on completely different factors, often relating to process, agency/ownership, and timing.

- Your developers aren’t overburdened with work; you are prioritizing wrong or don’t have authority over your time, or you’re messing up your work-in-progress limits.

Very often, I see that the process around development is slow and ill-functioning, and that developers are brought in very late in the process, leading to them not really grasping what needs to be done. Or they can’t work in an efficient manner, whether by their own making or someone else’s.
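The point about work-in-progress limits can be made concrete with Little’s Law, which says that in a stable system the average lead time equals the average work in progress divided by the average throughput. The numbers and the function name below are made up for illustration; treat it as a back-of-the-envelope sketch, not a model of any real team.

```python
def average_lead_time_weeks(wip_items, throughput_per_week):
    """Little's Law: average lead time = average WIP / average throughput.

    Only holds for long-run averages in a reasonably stable system.
    """
    if throughput_per_week <= 0:
        raise ValueError("throughput must be positive")
    return wip_items / throughput_per_week


# Same hypothetical team, same throughput of 5 finished items per week.
# Only the amount of work started at the same time differs.
for wip in (30, 10):
    weeks = average_lead_time_weeks(wip, throughput_per_week=5)
    print(f"WIP {wip}: average lead time {weeks} weeks")
```

Nothing about the team changed between the two cases, only how much was in flight at once. That is why “we need more people” is often the wrong first reflex.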

Software isn’t industrialized enough yet

Often we see organizations doing software development in an essentially artisanal mode—instead, we should look at even further industrializing it.

Industrializing will mean the loss of jobs or at least substantial changes to how the work is conducted. That’s evident in how, for example, QA engineers are moving uphill to more complex training of engineers, rather than plainly writing tests, like they used to do.

Another example is how CI/CD dramatically industrialized the building, testing, and releasing of software. It won’t, and shouldn’t, end there, especially as these are not new things—it’s not the future, it’s the past!

There is no single certification, standard, way of working, or anything else that tells if a person is a competent software developer/engineer, architect, “IT person” or anything else. Industrializing software won’t be done globally across all businesses etc. (unless there is some complete and total sea change happening), but it should be done locally, by looking at which parts of the work we commonly struggle with are frictional, failing, missing, or poorly done. Especially anything to do with handovers and such. Those are easy wins.

It’s clear to me that software engineering, as a discipline, has a lot of best practices and so on to support industrialization. But it seems very much missing in the design, architecture, and feasibility stages, for example. This area—the generative front end, as it were—in particular, is ripe for improvement.

Exciting directions for the future

Make your whole organization part of the “software” team. If software affects the entire org, then we can’t simply hyper-optimize it locally for the one single developer persona responsible for it today.

Is that not the promise made by low-code/no-code (LCNC) tools like Glide, Retool, and Bubble? And while those are growing better every year, many such tools still struggle with elementary features of a development workflow (think CI/CD, tests, version control), and still remain somewhat inaccessible to users who may, for the first time, encounter typical programming and computer science concepts, not to mention design patterns (to some degree, given the limited code access) and concepts like separation of concerns and reusability.

I’ve heard enough times from people around me how LCNC tools and “citizen developers” have brought ruin to perfectly sensible software creation. I accept that, and I can definitely see why.

There is still a heavy, sometimes latent skills gap represented by these tools. They try to make the essential complexity and basics of computer science and software engineering invisible, and therefore lose what makes software software. This can improve with better UX, design, and mental models, as well as standard-fare things like tutorials.

With that said, there’s no need to discount the possibility that this space will grow a lot over time. Further, we are starting to see tools like Qlerify (a fairly new startup) and eventualAI (a startup that’s now unfortunately closed) trying to put a different spin on how to approach the development and conception of software.

Qlerify starts with modern best practices like event storming and a Domain Driven Design-wrapped concept to steer business process modeling first, ultimately flattening the otherwise tedious movement from discussion, discovery, post-it note writing, into modeling the technical landscape. All of this becomes significantly sped up here compared to a physical flow. Or in their own words:

Qlerify combines a co-authoring workspace with AI autogeneration of content, process modeling, data modeling, requirements gathering, and backlog management — all through a single user-friendly interface.

However, AFAIK it doesn’t do the next logical step: The generation of code (maybe, thankfully so, given the wobbly state of generative AI).

What was exciting with eventualAI was that it aimed to marry the modern approach of infrastructure-as-code with “business intent”, coated in a DDD package. As they called it: “Business requirements in, cloud service out”. A really sweet proposition that starts at the important stuff, not making software (only) a developer problem.

At least for me, this starts to point at a nascent future in which we begin the process of forming software (prior to development!) based on the real problems, democratically pushing down decisions to the actual stakeholders while making them accountable and “in-the-loop”, as well as eliminating big chunks of the undifferentiated plumbing work that many developers do.

I just don’t see big generic development teams (in their hundreds) plumbing integrations all day long in a few years.

Escaping the ditch

Ditch-digging is about valuing the sweat and toil (energy output) rather than the outcome.

It seems to me that one of the absolute differences between organizations who “get” technology and those who think of it as merely ditch-digging is that

technology-aware organizations understand and mine the explicit, known value of software, while technology-illiterate organizations struggle with high costs of doing what is, at the end of the day, non-value-adding work; but at least there is a lot of it!

As simple as that. You might even crassly say if tech makes the bottom line black then it’s a tech org, if you’d like, though that’s a bit narrower than what I’m reflecting on here.

Compared to hardware, software is “soft” because it changes and can be actively and intentionally changed. In a sense, software is change, or at the very least the two concepts are correlated to a very high degree.

Let me share some of what I’ve learned about software development.

- Software development, like any business venture, is predicated on generating value.

- Software development is never the goal of value generation, it’s (maybe) the start.

- Software development can benefit a huge number of organizations but the benefit is neither unconditional nor guaranteed.

- Software development is the business or at least highly entangled with it, in all but trivial cases.

- Software development is ill-understood by those not directly working with it.

- Software development is often misunderstood where it does exist.

- Software development, in an organization, must impact its operations for there to be actual, honest-to-God proof of digitalization being at play. If it does not, don’t build software.

- Software development is not, despite the name, fundamentally predicated on there being any software developers, as evidenced by how tools and paradigms are evolving (iPaaS platforms, low-code/no-code, generative AI, and workflow-centric concepts like Step Functions Workflow Studio and Application Composer are all early indicators of this evolution happening as we speak). Thus the role or meaning of a developer is transient and changing.

- Software development openly encompasses a broader set of concerns today (in the modern cloud, etc.) than it traditionally did during previous paradigms.

- Software development competency, in the engineering sense, is not democratically distributed evenly or persistently available in all locations/markets, no matter the size of your wallet.

- Software development is often done in ways more reminiscent of “craft work” (artisanal) than “industrial work” (engineering).

- Software development can be incredibly heavy-handed, slow, and expensive even when conducted under good conditions.

- Software development is predicated on getting the flow of it right (fast, insight-generating, automated wherever possible…).

- Software development is waste-prone when mismanaged, which is the norm rather than the exception.

In short: It’s a risky business.

Escaping the ditch should start with a good old bender. Get yourself shitfaced and build up the courage to ask where you are in the process. Or do yoga, or whatever gets your mind to release those dirty thoughts, without filters:

Where are you in this spectrum of IT rot, and can you still save yourself? Are you still fighting the “good fight” or are you just a soldier fighting for a lost island? What options do you see that are actionable to improve the conditions? Who are you liaising with outside of your own department? Can your organization truly change how it integrates software in its core business and make people other than developers accountable for it and the “value” it supposedly brings?

At any rate, this sort of work won’t be done by standing still, unchanging, and will require courage, reflection, and insight very few show on a regular cadence.

But we all have to start somewhere to get out of that goddamn ditch.