Playing with realit[y/ies]: Can alternative spatio-physical gameplay systems break norms and conventions in avatar-centric games?

Originally written in spring 2011.

Abstract

I begin by providing a brief timeline of video game control interfaces. The games we play depend on an interface used by the player. Using these controllers, players understand interactions and affordances in games as temporally, spatially and physically mapped onto the buttons of their current interface device. While controllers (as pure hardware) have been constantly redeveloped and tested, and games have offered a wide variety of interaction possibilities and representational loops for players to become involved in, few have ventured into creating controllers that are simultaneously invisible, reprogrammable and specially developed for a certain type of game.

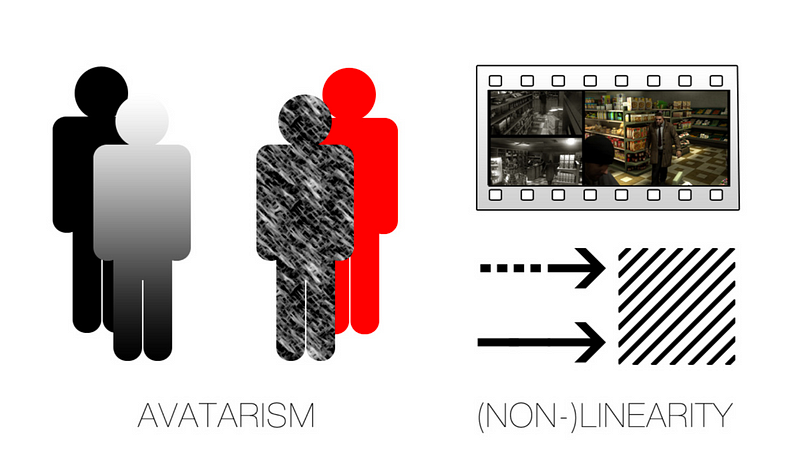

The problem is defined as both one of interface and one of content, but most of all through the combined issues these free-standing factors create. Further, the complex issues regarding avatarism, ethics and conduct are given a basic framework to live inside; these issues will be the focal point for the development of any proposed system model that places physicality at the center.

My claim is that rethinking interface design and implementation will help in this task, based on a notion of games as normative media. A physically interfaced but digitally mediated game-space may combine the best qualities of traditional theater with the near-infinite expandability of digital space. Using the ideas of both game theorists and interaction designers, I present a concept model of how this may be made possible.

1. Introduction

1.1 Just a game?

The interactor starts in a narrow corridor. Pressing the projected image of a door on a wall ”opens” the next room (projecting another room instead of the current room). The walls start mechanically moving, and in a matter of seconds the office is revealed in the new space — this time larger, and square-shaped. You look at the office. It’s old, shabby, neglected; nicotine-yellow walls reflect the bright city lights outside. This office, as all other surfaces, is optically correct from any angle, using the combined techniques of advanced depth mapping, parallax geometry and realtime coordinated lighting across all virtual surfaces. A mild smell of dust and old paper can be traced, dispersed via the environment superstructure when entering the virtual office. Sound playback takes into account the virtual acoustic space, to provide simulations of how the soundscape would actually sound if this office was real. Time is running out: you expect it’ll only take a few minutes before the landlord does his nightly round. It is indeed a game of sorts.

The papers you are looking for should be in the locked cabinet by the window. The interactor approaches the cabinet displayed on the projected walls. Detecting proximity to the only-virtual cabinet, a boolean state unlocks direct interaction with it when the interactor looks at it (a physical kind of ”raycasting” or distance detection). When the player stretches his/her arm out and flexes it — a sensor measures if it is flexed — effects are triggered in the game simulation, in this case, opening the cabinet. You manage to forcefully lockpick the cabinet’s dilapidated lock, scramble through the documents — quickly checking that they are the ones you were asked to get. Looking for the right document would be done by actually moving one’s hands, similar to how the Wii uses simplified motions to recreate more complex ones in-game. You leave just in time for the landlord to turn the corner, walking towards the now empty office. As the interactor finally moves toward the door for a quick exit, he/she needs to move their real body. Movement is correlated 1:1 since distances are spatially recreated in scale. The game is over — for now.
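The unlock logic in this scenario (proximity, line of sight, then a flexed arm) can be sketched in a few lines. This is only a minimal illustration under assumptions of my own: the function names `can_interact` and `cabinet_opens` are hypothetical, the reach and gaze-angle thresholds are invented, and positions, gaze direction and the arm-flex reading are passed in as plain values because no concrete sensor API is specified here.

```python
import math


def can_interact(head_pos, gaze_dir, target_pos,
                 max_distance=1.5, max_angle_deg=15.0):
    """Return True when the target is within reach and roughly looked at.

    All thresholds are illustrative assumptions, not measured values.
    Positions and directions are (x, y, z) tuples in metres.
    """
    to_target = [t - h for t, h in zip(target_pos, head_pos)]
    distance = math.sqrt(sum(c * c for c in to_target))
    if distance > max_distance:
        return False          # too far away: the boolean state stays locked
    if distance == 0.0:
        return True           # standing exactly at the target
    gaze_len = math.sqrt(sum(c * c for c in gaze_dir))
    if gaze_len == 0.0:
        return False          # no usable gaze reading
    cos_angle = sum(a * b for a, b in zip(to_target, gaze_dir)) / (distance * gaze_len)
    cos_angle = max(-1.0, min(1.0, cos_angle))   # guard against rounding
    return math.degrees(math.acos(cos_angle)) <= max_angle_deg


def cabinet_opens(head_pos, gaze_dir, cabinet_pos, arm_flexed):
    """The cabinet opens only if interaction is unlocked AND the arm is flexed."""
    return arm_flexed and can_interact(head_pos, gaze_dir, cabinet_pos)
```

Standing one metre in front of the cabinet, looking straight at it and flexing the arm satisfies both conditions; looking away or standing across the room does not.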

1.2 Background

As the number of free-form, open-ended games has started to grow exponentially, it seems that even less reflection is taking place about the conditions in which these games exist in the physical, corporeal sense. An obvious consequence of a mediated second reality is that the controls used to manipulate the game simulation are always subject to certain facts of the medium. The controller pad cannot have any number of buttons, and their physical placement must correspond to how the hands hold the device and how the fingers and thumbs can move. Because the quest for free expression through games has still not yielded very impressive results, it is time to refocus attention beyond the mere contents of games.

My main argument is that the controllers available to date are matched to the contents and possibility spaces conveyed in modern and classic digital games — systems that over decades still roughly concern only a few, gameplay-weighted actions based around challenges. By utilizing ideas from HCI and interaction research, I present a few ideas of how conceptual interfaces may change the representational spaces and interactions allowed in games, in effect moving from games of competition to games of reflection and discovery. Focus could be redirected to aesthetic or sensory inputs, for their own sake, and not necessarily to guide the player dutifully through a teleological challenge repository.

Possibilities for ethical gameplay will be seen as the ultimate target in this paper, with the backing notion of ”innovative interfaces” as a means to reach such an end. I present a sketch for an invisible model suited for (limited-)spatial games with a focus on dialogue and immediate, physical events while not in any way removing digital mediation. Inspiration comes from theater, live action role-playing and experimental forms of theater. The experience could in some ways be likened to forms of place-based cultural consumption: an exclusive, somewhat unique and active form of consumption different from the any-time-any-place-with-frontrow-comfort mode of modern screen-viewed games. Hardware (or, more specifically for this paper, interfaces) has always impacted, and will continue to impact, what games can be made and played.

2. Brief timeline over video game control interfaces

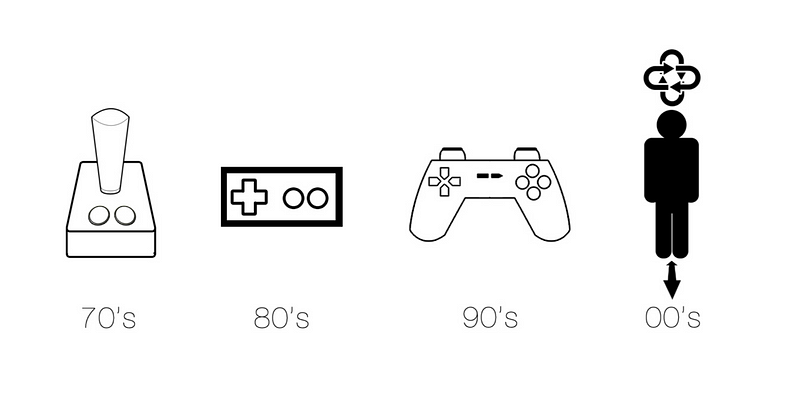

As interaction design, user experience design and the HCI discipline in general have become ever more grounded in the games industry, considerable emphasis has been placed on creating controllers that are ergonomic, simple (though not ”basic”) and multipurpose, with occasional splashes of innovative thinking for good measure.

Pong and arcade machines began taking up space in the hearts and minds of enthusiasts in the 70’s and 80’s as well as regular people exposed to the quirky cabinets placed in rest stops, pubs and restaurants. Joysticks were the most common interface. Games were still almost exclusively something found outside the home environment. These games were novel also in the business sense, because they were very hard so as not to let players loiter too long at a machine, maintaining a steady influx of coins to the machine by new wannabe-score-setters. It simply made perfect business sense to surround the gaming experience within the framework of challenges — something that has continued long since then.

Commodore 64 was one of the first really successful home computers and quite literally put the classic joystick in people’s homes. It provided players with 8-directional movement and two buttons for input. The joystick was normally seated on a table or other flat surface for support.

Nintendo launched the Nintendo Entertainment System (commonly known as the 8-bit Nintendo) in the mid-80’s with its classic rectangular controller. As opposed to the joystick form factor of the C64, a flat hand-held control pad offered thumb-controlled directional input, which would become the standard for decades to come. Select and start buttons (”metabuttons”) were also added, but the primary button count was still the same: 2 buttons for selective, direct input.

The late 1980’s and 1990’s brought an extremely intense ramping up of controller improvement, as well as some of the absolutely wildest controllers ever conceived, including the Nintendo Power Glove, Virtual Boy and the Dreamcast controller, which was far more advanced than what its era could cope with. Controllers like the one for the Atari Jaguar tested the limits of button count and quickly died: no one wanted twelve secondary buttons. Such staples as the dual analog sticks, pressure-sensitive triggers and controller vibration were born in this decade, so most controllers today are similar to those of this era: the initial PlayStation controller has only subtly evolved in iterations for 10–15 years, and Microsoft’s first hamfisted attempt has grown to be the all-time favourite controller of countless millions of gamers.

The most recent home console controllers are motion-based: Devices available are the Nintendo Wiimote, PlayStation Move and Microsoft Kinect. These will be examined to some extent in this paper.

Lastly, mobile gaming has finally reached the point where developers, technology and gamers have all come to accept it. Affordable mobile touchscreens combined with expanding WiFi networks have brought about an almost completely separate market segment and way of interacting with games and game technologies. While even the Nintendo Game & Watch and Game Boy were popular back in the 1980’s, few could have counted on the explosive growth Apple’s iPhone brought about.

3. Games as normative media

A normative approach implies some informal means by which users self-regulate their activities, in this case, their ways of playing the game. Wikipedia [1] sums it up with: ”Normative ethics is the branch of philosophical ethics that investigates the set of questions that arise when we think about the question ‘how ought one act, morally speaking?’” It is not my intention here to discuss branches (and their respective sub-branches) of normative ethics as such, but rather to direct attention to some basic ethical grounding and practical entry points for these within games.

3.1 Virtually unreal

Games occupy a unique spot in human culture in that they are neither real nor just play [2]. Looking at established definitions and theories of digital as well as non-digital games, two factors which will be challenged here rear their heads. Those two are ”fun” and ”competition”, although the usage of these terms may differ somewhat between different scholars and schools of thought. If the goal, as here, is to create a spatio-experimental game with a specific focus on low-intensity gameplay, utmost attention must be paid to coupling interactions with meaningful output [3] to support games that excel in immersive appeal. Immersion itself is a very disputed term, and for the sake of argument, I will here hold it to a broad definition of letting affordances and active gameplay override the artifice of the actual experience; that is, the proposed system should become transparent in its feedback loop and effectively become ”invisible” for the interactor. I am not concerned with other definitions, games, game typologies or game genres here.

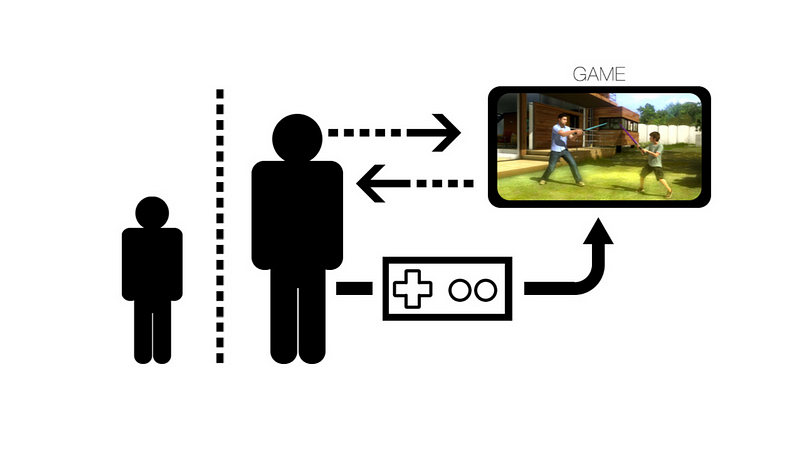

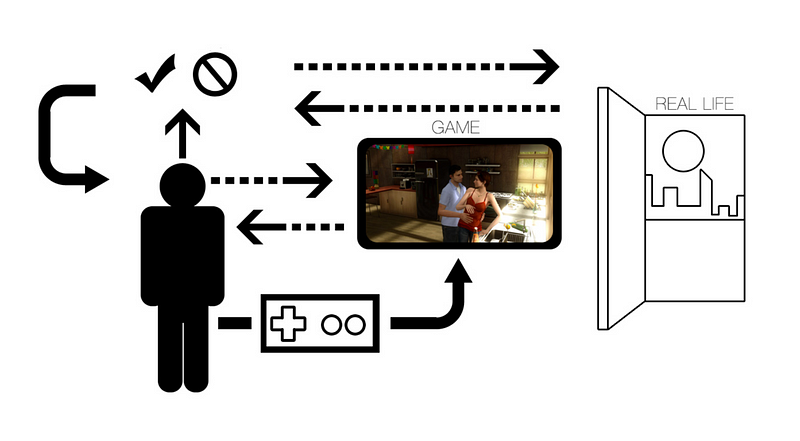

Games come with a certain degree of pre-defined doctrine, or procedural rhetoric as Ian Bogost [4, 5, 6] would call it. In short, the choices, possibility spaces and ramifications of choices made affect the player’s perception and understanding of what is OK (”legal” in programming and law terms) and what is not OK to do in the gameworld. Being the input device that taps into these secondary realities, the controller (regardless of sort) has both physical and psychological constraints as to how it may be used. While theoretically any number of represented actions could be mapped onto even a single boolean-mapped (true or false) button, there must be coupling for the player-interactor to feel that the actual action makes sense in the context of the game. Therefore, we must assume a position where the physical use is strongly tied to the represented state change; the two must not be allowed to become decoupled.

A game that made a very valid point about the connection between socially constructed rules and available interface-informed actions is B.U.T.T.O.N (Brutally Unfair Tactics Totally OK Now) [7], by research and developer group Copenhagen Game Collective, where socially unacceptable super attacks are available on the actual controller, and these may be used, at least purely programmatically speaking. That is, the game has no inherent bias against a player making use of these. The premise of B.U.T.T.O.N is that the social context should discourage the actual usage of this button, so players will find themselves pariahs if they really do use it (it is after all against established social convention to gain unfair advantage) and therefore receive due punishment for any infringements on this rule. A melding between screen-space and play-space has occurred.

3.2 Doing, reflecting, reapplying — what game designers must bear in mind

Game designers must remember to create games that do not enforce ideology via limited in-game actionability. New interfaces can move games from limited-choice menu selection exercises toward a truly tactile, performative art that emphasizes ”doing” rather than spectating and thus uses a fuller range of physical and haptic feedback. We must therefore recognize that these new games are not necessarily created for ”pleasure” as the primary stimulant. Pleasure is used here to mean the sort of feelings traditionally associated with games and gaming: euphoria, flow, drive, lust and the often explicit goal of attaining goals or overcoming various obstacles. Certain foci on embodied, social, physical and spatial relationships need to be put in place to open up the design practices. In using an ecological approach much more reminiscent of augmented reality and mixed reality disciplines, many of the limitations of regular digital games are left out. I will address these by means of broad statements.

3.2.1 Games simulate some range of real or unreal conditions and variables of that game-world

As one of the founding fathers of ludology (the study of play), Gonzalo Frasca famously wrote about game systems as facilitators of reflection: ”[Simulation] does not deal with what happened or is happening, but with what may happen. Unlike narrative and drama, its essence lays on a basic assumption: change is possible. It is up to both game designers and game players to keep simulation as a form of entertainment or to turn it into a subversive way of contesting the inalterability of our lives.” [8] Frasca continues that complete simulations, such as of nature, are not what a game simulation strives for. Rather, it should consist of a deliberately reduced number of variables that (perhaps even emergently) create new behaviours. A simplified simulation of this kind uses the human brain’s capacity to create greater wholes from a number of incidents; that is, it deduces meaning from the relations of facts, circumstances, connections or events. Writing on games as spaces for self-fashioning one’s identity, Felan Parker walks a line similar to that of Frasca while drawing on Juul: ”Expansive gameplay emerges from the exploration of a game’s rules and the spaces for play that they constitute, just as practices of freedom emerge from the exploration, contemplation and problematization of the self as constituted by the rules of society. […] Game rules are a special class of rules that reference and emulate (but also overlap with and are subject to) the kinds of rules that are lived in the real world. Reality is not just a game, and games are not entirely “real.” For the expansive gameplayer, the game becomes like a scale model that simulates, adapts and recontextualize the systems of reality.” [9] Games can be tailor-made to encourage this type of higher reflection.

3.2.2 There is a highly tactile, corporeal aspect to (the act of) gaming.

Manipulating a non-existing object with a plastic gamepad has the problem of distancing the perceived output on-screen from the actual ”hand-led” input. Some games have tried to better map the correlation between these two in order to reproduce movements such as patting, waving or stroking. A good example of this is Heavy Rain [10], where the player, in the role of a grieving father, can in one scenario comfort one of his children. The physical movements involved are mapped to a simplified, if rather evocative, simulacrum. The action is in no way general purpose: it is hand-crafted for this exact scenario, which may in fact never even be performed by the player. It is but one controller-exclusive interaction among what probably amounts to a hundred such events. Critics and players have lauded the way in which everyday and seemingly forgotten interactions are made to work within the game to create an experience that feels and works in a ”mature” way seldom seen in the medium. Even less poetic games, such as Quake or World of Warcraft, become deeply corporeal games where the player’s physical performance is of utmost importance, not unlike traditional arts like dance [11]. The actual performance and ”doing” must be central in a physically realized game.

3.2.3 Games can evoke ethical reflection and SHOULD do so if it makes sense in the context of the game.

Markus Montola [12] concludes his report on positive negative experiences in extreme (non-digital) role-playing games with: ”As a cultural form, this kind of role-playing is not unlike movies such as Schindler’s List: perhaps unpleasant on a momentary and superficial level, but rewarding through experiences of learning, insight and accomplishment. […] The obvious conclusion is that the scope of playful experiences is broader than most models suggest, and that the digital games industry has a lot to learn in the art of gratifying through positive negative experiences.” Some digital games have of course tried to traverse these lands. Miguel Sicart, another scholar who has been deeply involved in the study of digital games as ethical systems, made the following interesting point: ”… games like Shadow of the Colossus and Manhunt are more successful in being closed ethical experiences. They are so because the act of playing, and thus of experiencing the game, involves making moral choices or suffering moral dilemmas, yet the game system does not evaluate the players’ actions, thus respecting and encouraging players’ ethical agency.” [13] Parker argues, not unlike Paulo Freire [14] and Augusto Boal [15], that to understand a system is to explore one’s possibilities in the real world: ”The kinds of strategies that can be employed by an individual constituted by and existing within a system of rules, and the ways in which these practices are cultivated and produced through the active contemplation of spaces of possibility, demonstrate a link between the playful, “safe” exploratory practice of expansive gameplay and the more substantive transformations enabled by a rigorous, real-world practice of Foucauldian self-fashioning. Foucault’s conception of the self differs from other philosophical models in that it can be applied within any game or rule-based construct.” [9] Ethics can go hand in hand with the broader, systematic simulation.
Simple polarized ethical structures should be removed; instead, the actual consequentialism could be a stronger element than is usual today. Physical games should be able to negate to some degree the highly tunnel-sighted challenge-induced “objective achievement syndrome” of digital games, which could emphasize the looser poetic and ethical points of a physical game, as Montola noted.

3.2.4 The game-world is its own set of affordances.

Spatial narratives in computer and video games have been written about by, among others, Jenkins [16] and myself [17]. As Daniel Vella writes: ”By their very nature, however, videogames possess the capacity to grant the embedded narrative site an ontological status that does not depend on the exploratory narrative. To reduce the argument to the point of banality, the gameworld, as a complete, three-dimensional fictional space, exists in its entirety, waiting to be discovered, even if the player proceeds to run in circles in the starting room.” [18] Any game space must be made accessible and usable so that ”[Game environments] are anchored firmly in the player’s inhabiting of, and journey through, the space of the gameworld.” [18] As games like World of Warcraft show, deep connections can be created by building non-linear, spatial worlds rather than dramatically narrating a linear sequence of events. If anything, the inhabiting of this place will make it one’s own in ways impossible with pre-structured ones. Physical games should encourage a structure that is experiential, also in the exploratory sense. Compared to contemporary games, there should be much less need for a linear sequence of events.

4. Current state of affairs – Hardware that may impact future games

Invisible interfaces are not commonplace in the consumer gaming marketplace, and there are therefore no ready-made, instant problem-solvers close at hand. We will need to examine the alternatives before looking at a more substantial interface solution.

When the Nintendo Wii [19] was released in 2006, many hoped that this could mean the beginning of the end (or at least, less dramatically, a major change) for how we interact with games. More importantly, enthusiasm grew about how this could become a great leap in what types of games might conceivably be created for such a novel interface. The Wiimote does a fairly good job of mapping a relatively accurate movement of the arm, basing its reading primarily on acceleration and rotation. Based on hearsay and critical consensus, the PlayStation Move [20], a rivalling product, does very much the same thing, although its later release date (2010) has helped in providing moderately better mapping technologies in the controller. In essence, both are the same, so the Wii Remote will stand in for both for the duration of this paper. As time has gone by, the gamer community has scorchingly criticized the Wii as a sell-out whose games are more arm-waving contests than anything else.

Microsoft Kinect [21], an accessory for Xbox 360 that sold over 10 million units between November 2010 and March 2011, has seemingly become more of a favorite among hobbyist electro-hackers and computer graphics artists than among the general audience, as can be seen in the disappointing sales of games for the device. Currently Kinect is where Nintendo has been for some time now: it has the tech to support high-quality games, but no such games. Interface-wise, on the other hand, Kinect is novel: it uses no traditional controllers or physical token devices, but instead makes use of a colour camera, an infrared depth sensor and nifty programming to create an internal skeletal model of the player(s). It then reads the bones and their movements to finally perform the corresponding movements on-screen.

In the less glamorous field of DIY electronics, Arduino [22] has gone from a dream project that imagined a democratic platform where basically everyone could create novel electronics to being mass-produced and used worldwide as one of the first widely used open-source platforms for creating hardware/software hybrids. As the recent documentary film about Arduino explains, the next step is to encourage the use of the chip in machines that can create new physical objects. Thus far, most ”products” raised out of Arduino have been small-scale custom-built things such as DOTKLOK [23]. The raw schematics are usually free, licensed as open source (as is the case with DOTKLOK), and somewhat resemble the ”spimes” that Bruce Sterling [24] envisioned: ”In a SPIME world, the model is the entity, and everyone knows it. […] Practically every object of consequence in a SPIME world has a 3-D model. Those that were not built with models have 3-D modelling thrust upon them. They are reverse-engineered: one aims a digital camera at the object and calculates its 3-D model by using photogrammetry.” Something like a replicating machine built around Arduino could be a central production asset when practically creating items for my proposed system.

As things stand right now, in 2011, interfaces are nothing if not central for the coming generation of games, but it is still very early, and it is hard to predict the next few years or the chances of the upcoming rumoured technologies for major acceptance in the marketplace, even in the short term. With some mild divergences, the television screen is still the optical focus and active playspace that the game companies are targeting.

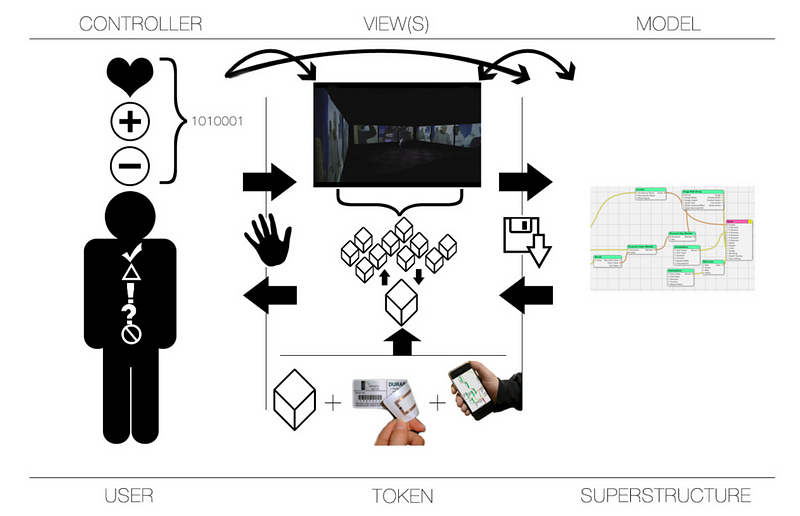

5. An ecology of hardware and software – the system itself

The system uses two modes. Based on their user-experienced state, these are, for the sake of clarity, called ”White Box” and ”Black Box”, the names suggesting modes of usage rather than separate sets of objects and means of interaction. The names are taken from AI research, where ”white box” means that the system is open and exposed, and a ”black box” is closed and rigid. Building, or reflectively using, this system would imply the ”white box” mode, but this turns into a ”black box” once the user-interactor steps into a ”rigged set” of tokens. Being rigged would mean that a collection is staged, as well as being ”rigified”: it is made (semi-)permanent. Each token is its own ”driver”, containing a set of affordances and (re)combinatory ways in which it can be used; it is inherently separate in its private processes but will also impact the other malleable objects within its range. This is the key to the ecological perspective. As soon as it is rigged, the environment is more properly called a ”superstructure”: the map and affordances of the connected tokens within. A superstructure works by augmenting reality through combinations of digital and physical layers. Because the user-interactor has not taken part in the rigging of the closed superstructure, it becomes ”black boxed”: an opaque, closed, totally immersive and invisible setup.
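The relation between the two modes can be made concrete with a small sketch. It is an illustration only: the class and method names (`Superstructure`, `rig`, `mode_for`) are hypothetical, and I assume that the mode is decided per user, so that the same rigged set is a white box to whoever rigged it and a black box to everyone else.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional, Set


class Mode(Enum):
    WHITE_BOX = "white box"   # open, exposed, reconfigurable
    BLACK_BOX = "black box"   # closed, opaque, immersive


@dataclass
class Superstructure:
    """A collection of tokens that may or may not have been rigged (staged)."""
    tokens: Set[str] = field(default_factory=set)
    rigged_by: Optional[str] = None   # None means the set is still open

    def mode_for(self, user: str) -> Mode:
        # Assumption: only someone who did not take part in the rigging
        # experiences the set as a black box.
        if self.rigged_by is None or self.rigged_by == user:
            return Mode.WHITE_BOX
        return Mode.BLACK_BOX

    def add_token(self, name: str, user: str) -> None:
        if self.mode_for(user) is Mode.BLACK_BOX:
            raise PermissionError("a rigged set cannot be changed from inside")
        self.tokens.add(name)

    def rig(self, designer: str) -> None:
        """Stage the current collection and make it (semi-)permanent."""
        self.rigged_by = designer
```

A designer can keep adding tokens before and after calling `rig`, while a player who steps into the rigged set can only interact with what is already there.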

5.1 Invisibility

The black box superstructure is formally based around invisibility. Like Mark Weiser’s notions of Ubiquitous Computing [25], the desire here is to completely veil all involved technologies, especially the mediating devices and remediating screens and other one-way elements. The goal is to facilitate interactions between actual physical objects. The black box is a radical departure from conventional avatar-centric gaming. As Paul Dourish [3] discusses at great length, the philosophical underpinnings of interaction design owe much to the thinking of such people as Martin Heidegger and Ludwig Wittgenstein. Dealing with epistemology through ontology and actual bodily interaction, the black box extends from the Heideggerian notion of ”tool usage”. Ergo, the (game) objects vanish into the background (because they are unmediated) and can in many cases be used as 1:1 representations of what they are by having the object in real space. No remote control is necessary in the core concept, but borrowing from Hiroshi Ishii’s concept of input devices that represent both physical and digital domains, it would make sense to somehow digitally enhance the interaction space. On this issue, Dourish writes: ”Another perspective on the problems of invisibility follows directly from the idea of embodiment. Looking at embodiment, intentionality, and coupling shows that the relationship between the user, the interface, and the entities that the interface controls or represents is continually shifting. The focus of attention and action is subject to continual and ongoing renegotiation. A system can take no single stance toward its subject matter; it must allow for this play.” [3] A multi-purpose controller could work, but substantial testing would be required to find an acceptable design for the final interface artifacts.

5.2 Visibility

The ”box” itself consists of uniformly sized wall tiles (reminiscent of traditional modeling techniques for digital games) onto which digital images are projected. A cube-formed set of walls can turn into any representation in a fraction of a second. (See PROJEKTIL’s architectural projection mapping [26] or philosopher Slavoj Zizek’s performance on Dutch television channel VPRO for inspiration [27].) A few minor problems may already have been raised by the reader at this moment. What, for example, would happen with depth space information that is projected on a flat surface? Would it not be terribly distracting to continuously note the illusory effects at hand? A commonplace technology in 3D graphics is the use of ”normal maps” [28]. These are specialized flat images that, once wrapped around an object, use the color values in the image (or ”texture”) to encode surface directions and thereby simulate a limited amount of depth information. Seen from acute angles, however, the effect becomes apparent as a trick or visual hoax. A further development of this is radiosity normal maps or RNM [29], which contain lighting information, making any such visual artifice much harder to spot while still allowing for even more dynamic light conditions. This would seem to be relatively similar to our problem at hand. The solution I am suggesting is an internal game representation that mimics at least four separate cameras, using layers that are orthographic (angleless, depthless) as well as distant objects that would go into the normal perspective rendering pipeline. To work with these, some amount of information about user positioning would need to be communicated to the server. Head tracking and the user-interactor’s view angle should be fairly easy to capture in real time. With this information, (secondary?) parallax effects could be created, such as moving background geometry if looking ”through” a window. A similar iPad application has already been tested [30].
Each projector would render the walls themselves from an orthographic perspective, while the far-away skyline (or other outdoor objects) would be rendered in perspective, with all the rotations required to simulate parallax vision. Combining this with the ever-cheaper 3D support in higher-end televisions and projectors, one might even be tempted to apply some degree of depth to the rendered image. This method of envisioning and (re)creating flat surfaces could be used to create extremely compelling and rich visual experiences while remaining cheap and infinitely modifiable. This idea obviously implies that all walls are perfectly flat, but alternate versions could involve spherical rooms or mechanically adjusted walls. Walls could well be some fabric, like giant projectable sheets of overhead projector paper, for example.
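The head-tracked parallax idea can be illustrated with a small geometric sketch. It assumes a coordinate convention of my own choosing: the projected wall is the plane z = 0, the interactor's tracked head is in front of it (z > 0), and virtual scenery lies on or behind it (z <= 0). The function name `project_to_wall` is hypothetical and not part of any existing renderer; it simply intersects the line of sight with the wall.

```python
def project_to_wall(eye, point):
    """Return the (x, y) spot on the wall plane z = 0 where a virtual point
    must be drawn so that it lines up correctly for a viewer at `eye`.

    eye   -- (x, y, z) of the tracked head, with z > 0 (in front of the wall)
    point -- (x, y, z) of the virtual object, with z <= 0 (on or behind the wall)
    """
    ex, ey, ez = eye
    px, py, pz = point
    if ez <= 0:
        raise ValueError("the viewer must stand in front of the wall (z > 0)")
    if pz > 0:
        raise ValueError("the virtual point must lie on or behind the wall")
    # Parameter along the ray from eye to point at which z reaches 0.
    t = ez / (ez - pz)
    return (ex + t * (px - ex), ey + t * (py - ey))
```

A point lying on the wall itself (z = 0) never moves, which matches the orthographic treatment of the wall layer, while a distant skyline point slides along with the viewer's head, which is the parallax seen when looking ”through” a window.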

5.3 Reprogrammability and reflexivity

The overarching concept is really all about objects being able to take the role of a specific token in a context. A given token can hold different levels of interactive potentiality. These would lie somewhere on a gradient between active (can influence other tokens) and passive (object state is just communicated and tracked). In a game context, a representational-reflective setup (white box) would be better focused on usages similar to non-digital, traditional games. A white box system is modular and fully customizable. Using it as an open white box system emphasizes modularity, bricolage, spontaneity, creativity and non-finite ad-hoc modulations between token states. The process is the centerpiece, and there is a good chance that a social layer will arise around the reconfiguration(s) of such a system. ”Reflective” means that it visualizes system state, is activated on a per-object basis (it fills no function until programmed) and is openly connected to the other ecological objects (its natural state is connectedness; disconnection is optional). The system is, again, entirely open and reachable through an API contained on one or more nearby superstructure facilitators (mobile phones, laptops). Any device that can reprogram tokens is a superstructure facilitator. Games can be based on proximity, acceleration and relative position in ways that are unusual for digital games. Even the idea of tokens is inherited from a rich non-digital gaming tradition, and perhaps this ”white boxing” could bridge some of the divide between non-digital and digital games.
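A token of this kind could be modelled roughly as follows. The sketch is deliberately reduced and rests on assumptions: the names `Token`, `links` and `reprogram` are hypothetical, and the active/passive gradient is collapsed to its two end points (a token with a behaviour is active, one without is passive).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Token:
    """A physical object playing a role in the ecology."""
    name: str
    state: Dict[str, object] = field(default_factory=dict)
    # No behaviour means the token is passive: its state is tracked only.
    behaviour: Optional[Callable[["Token", "Token"], None]] = None
    # Connectedness is the natural state; links list the tokens it can reach.
    links: List["Token"] = field(default_factory=list)

    @property
    def active(self) -> bool:
        return self.behaviour is not None

    def update(self, **changes: object) -> None:
        """Record a state change and, if active, let it influence linked tokens."""
        self.state.update(changes)
        if self.behaviour is not None:
            for other in self.links:
                self.behaviour(self, other)


def reprogram(token: Token, behaviour: Callable[[Token, Token], None]) -> None:
    """What a superstructure facilitator (phone, laptop) would do via the API."""
    token.behaviour = behaviour


# Usage: a switch token fills no function until it is programmed.
lamp = Token("lamp")
switch = Token("switch", links=[lamp])
switch.update(on=True)                      # passive: lamp is unaffected
reprogram(switch, lambda src, dst: dst.update(lit=src.state.get("on", False)))
switch.update(on=True)                      # active: lamp now follows the switch
```

Cyclic links between active tokens would need a guard against endless mutual updates; that is left out here to keep the sketch short.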

For tracking purposes, the ”box” could be scanned, recorded and reinterpreted or rearranged live via one or more 3D cameras, such as the Kinect (see Kimchi & Chips’ blog [31]). As a room designer, one should be able to augment any object for inclusion in the spatial ecology (via RFID), thus eliminating the need for virtual props. The use of RFID tags would be restricted to those items that are not deemed crucial (for the purposes of the gamespace). Still other objects, such as pencils or other small, insignificant items, may not be tagged at all. Important tokens, larger objects and system state-changing items would be tracked more extensively.

Reprogramming via the API will (most likely) be handled from a computer in the guise of a simplified SDK. Simplified programming interfaces of this kind can be seen in Microsoft Kodu [32] and in visual programming tools such as Alice [33] and Scratch [34]. Games could be shared between units and (sub)systems, people and techno-ecologies, and could emerge as results of combinations. It should be possible to replicate practically any existing non-digital game via this system. The API could possibly be web-based, which is now greatly helped by technologies such as HTML5. Coupled with the increasing potency of modern web browsers, even mobile ones, a true multiplatform API would not be a mere pipe dream but something worth pushing for. Multiplatform support will be the defining factor in making these environments accessible to people, since the collated rigs themselves will not become affordable for a long time.

5.4 Mobility and interactivity

We also need to recognize the inherent problems involved in current mass-produced technologies such as the Wii Remote and Kinect. In representing the X, Y and Z axes, a mapping between the player’s body, the player space (the real surrounding space) and the game space (on screen) needs to take place. Any miscalculations, misrepresentations or ”impossible” configurations mean that the player is unable to use the game-product in the desired way. Therefore a tangible rather than a fully abstracted mode of interaction is assumed for this proposal. The system takes some cues from the Model-View-Controller (MVC) pattern used extensively in some areas of programming. Using MVC, one can separate logic, data and representation. There is thus a chain at work:

Physical object (model) — Surface (view) — (Subset[s] of) room ecology (controller)

As Ishii & Ullmer proposed in their conceptual “TUI” (tangible user interface), the controllers would be both digital and physical representations of data. [35] As a short scenario idea, plastic cubes with built-in WiFi could be used together with a multitouch surface to replicate a board game. The surface’s grid size (granularity) and sensitivity are customizable through the API. The cubes have a simple system for working with game mechanics such as states and rules. The multitouch surface can have its graphics replaced, meaning that a tile-based reproduction of something like Settlers of Catan could load external libraries with visuals or other media. As the game state changes, the cubes can update their representational layer by, for example, switching colors. This means that the cubes are physical game tokens (“controllers”) and state notifiers (“views”) while retaining their ability to be multi-purpose.
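The board game scenario can be sketched in a few lines. The sketch assumes a rectangular surface divided into square cells whose size is configurable, and cubes that signal game state through color; every name, unit and color mapping below is a hypothetical choice made for illustration only.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Surface:
    """Multitouch surface divided into square cells (configurable granularity)."""
    width_mm: float
    height_mm: float
    cell_mm: float

    def cell_at(self, x_mm: float, y_mm: float) -> Tuple[int, int]:
        """Map a touch or cube position to a (column, row) grid cell."""
        if not (0 <= x_mm < self.width_mm and 0 <= y_mm < self.height_mm):
            raise ValueError("position is outside the surface")
        return (int(x_mm // self.cell_mm), int(y_mm // self.cell_mm))


@dataclass
class Cube:
    cube_id: str
    color: str = "white"


# Hypothetical rule table: which color a cube shows for each game state.
STATE_COLORS: Dict[str, str] = {"idle": "white", "owned": "blue", "blocked": "red"}


def update_cube(cube: Cube, game_state: str) -> None:
    """Let the cube act as a state notifier by switching its color."""
    cube.color = STATE_COLORS[game_state]
```

Changing `cell_mm` alters the granularity of the board without touching the rules, and swapping the color table (or loading a different one) changes how state is displayed. This is one way to read the separation of logic, data and representation proposed in the text.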

In the case of ”invisible” games, things get more complicated. As Lars-Erik Janlert reminds us, interfaces should have indexical qualities: ”The interface can signal real-world state affairs, but it should not be in the form of proper symbols, [but] rather like indexical signs in nature (e.g. smoke or smell of burning indicates fire).” [36] This gives some ideas on how to inform interactors of their avatar’s “metaperception” (more on this below). A system must encourage the user’s own thinking process (Janlert: ”Tracking reality is not thinking”) rather than merely identifying the actual current state. The current game state can be made explicit via ambient informatics. Narrative elements are physically represented and enhanced via lighting, pacing, smell, audio-visual cues and acting. Gamespace is treated as a narrative device, not dissimilar to how ”adventure houses” (such as the Swedish Boda Borg [37]) or amusement park rides (and ghost houses) create embodied, direct encounters with a staged environment. Biotelemetry could even feed stress levels and other variables as input to the superstructure, which would make calculations based on them. This in turn would regulate a defined range of factors and signal chains in the event chain (micro-narrative) that is currently active. Every session would therefore be conducted with complete regard for the user-interactor, creating something unique every time.
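How biotelemetry might regulate "a defined range of factors" can be shown with a minimal sketch. It assumes a stress estimate already normalized to the range 0 to 1 and blends each environmental factor linearly between a calm and a tense setting; the factor names, the ranges and the linear mapping are all assumptions rather than part of the proposal.

```python
from typing import Dict


def clamp(value: float, low: float, high: float) -> float:
    """Keep a value inside the closed interval [low, high]."""
    return max(low, min(high, value))


def regulate(stress: float, calm_value: float, tense_value: float) -> float:
    """Blend one environment factor between its calm and tense settings,
    given a stress estimate normalized to the range 0..1."""
    s = clamp(stress, 0.0, 1.0)
    return calm_value + s * (tense_value - calm_value)


def ambient_settings(stress: float) -> Dict[str, float]:
    """Hypothetical factors and ranges; real ones would be set per event chain."""
    return {
        # Assumed design choice: dim the room as stress rises.
        "light_level": regulate(stress, 0.8, 0.3),
        # Assumed design choice: leave longer pauses between events.
        "event_interval_s": regulate(stress, 20.0, 60.0),
    }
```

Because out-of-range readings are clamped, a faulty sensor cannot push the environment outside the limits the designer has set. Whether stress should calm the environment down or intensify it is a dramaturgical decision that a sketch like this leaves open.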

5.5 Realit[y|ies]

In a recent Master’s level thesis by Fagerholt & Lorentzon [38], the relationship between immersion and GUI was explored. Based on their research, it seems that meta-perception, that is, the extent to which the player and avatar can communicate unspoken information (for example, the avatar’s possibility to reach places the player may not know about, since the player is not attuned to the avatar’s latent possibilities or affordances), may be the best general-case method of maintaining a somewhat transparent relationship between interactor and avatar. The flip side is that the avatar is still implicitly present, but acted instead of represented. Such a choice would be made on a case-by-case basis. Again leaning on Ishii and Ullmer [35], I strongly favor a solution consisting of a wide array of ambient informatics (light, including modeling and actual recreation of 3D-space representations; sensor-driven feedback; three-dimensional audio; all very subtle cues) to enhance the sense of spatial reading. In creating a game of this type, it is easy to see how film and theater disciplines could be incorporated into the structure. Of course, reducing elements could also work well: in the experimental game Deep Sea [39], sensory deprivation and sound-based gaming have shown that immersion through reduction is highly effective. Its interface, while highly artificial, becomes such an important part of the general experience that it is impossible to replicate without the gas mask headgear used to interact.

What will all this mean for the notion of the avatar? Can there be such a thing if you are the actual avatar, or rather, the subject being addressed? Rune Klevjer, writing on the various kinds of player-identities in digital games, notes that traditionally these two have been melded together to complete one another. ”So there is no need to ponder the presumed lack of synchronisation between how the telepresent player is experiencing his role in the game world, and what we are told that the playable character is experiencing. The two exist on separate ontological planes. There is no reason to assume, for example, that the heavily caricatured television programmes we can watch in Nico Bellic’ residence in GTAIV are similar to the programmes he is watching, in the world in which he exists as a person. I have no idea what he watches, but I find it very unlikely that it would be anything like those videos.” [40] This brings up relevant questions about the future possibilities to ”act” as an avatar, rather than being ”oneself” in a black box game. Metaperception may, if nothing else, make the use of an invisible system more intuitive. Further studies must be conducted to see how these notions of avatarism should be addressed.

6. Conclusions

At this point in time, some of the crucial, structural factors involved in creating this kind of spatial interface are sketchy and inevitably speculative. Where I believe the main thrust has been, however, is in making a small excursion into the types of possibilities that open up when digital games can be mixed with real settings, and in putting extra weight on factors of emotion, tactile experiences and ”avatarlessness”.

Some of the issues and questions this brings up are:

- The fact that avatars and their roles are radically challenged by this concept

- Cinematic conventions may no longer apply as they have previously done

- The distancing between player and character needs to be re-addressed

- Tactile, haptic experiences no longer need mediation: how does one imply something like pain?

- In which ways can we create mid-box objects — furniture, obstacles?

- How can environments be extended infinitely? Is it even possible?

- Does it need human supervision, for example ”game masters”?

- Should it incorporate live actors?

Finally, moving back to the initial question, the title of this paper: can something like this proposed system (an ecological hardware model) give gamers a less-biased mediation tool to experience digital games? Any answer to this is obviously premature, but I think it could indeed highlight a few of the variables that remain to be introduced in the current variety of digital games, such as situatedness, previously unused senses (haptic and tactile input, smells, the ”sixth sense” of spatial collocation) and open variations on performed gameplay. Any attempt at realizing such a project will also need to take some kind of stance on whether it is AR, MR, purely digital, theater or even a localized ARG.

7. References

[1] Wikipedia: Normative Ethics, 2011. Retrieved May 13, 2011. Wikipedia: http://en.wikipedia.org/wiki/Normative_ethics

[2] Juul, J. Half-Real: Video Games between Real Rules and Fictional Worlds. MIT Press, Cambridge, 2005.

[3] Dourish, P. Where the Action Is: The Foundations of Embodied Interaction. MIT Press, Cambridge, 2001.

[4] Bogost, I. Unit Operations: An Approach To Videogame Criticism. MIT Press, Cambridge, 2006.

[5] Bogost, I. Persuasive Games: The Expressive Power of Videogames. MIT Press, Cambridge, 2007.

[6] Bogost, I., Ferrari, S., and Schweizer, B. Newsgames: Journalism at Play. MIT Press, Cambridge, 2010.

[7] Copenhagen Game Collective: B.U.T.T.O.N. Retrieved May 20, 2011. Copenhagen Game Collective: http://www.copenhagengamecollective.org/b-u-t-t-o-n/

[8] Frasca, G. Simulation vs Narrative. In Wolf, M. J. P., and Perron, B. eds., The Video Game Theory Reader, Routledge, New York, 2003, 221–235.

[9] Parker, F. In the Domain of Optional Rules: Foucault’s Aesthetic Self-Fashioning and Expansive Gameplay. In The Philosophy of Computer Games Conference (Athens, GR, 2011). http://gameconference2011.files.wordpress.com/2010/10/pocg_foucault_paper_draft3.pdf. Retrieved May 20, 2011.

[10] Quantic Dream. Heavy Rain. Sony Computer Entertainment, 2010.

[11] Toft Norgaard, R. The Joy of Doing: The Corporeal Connection in Player-Avatar Identity. In The Philosophy of Computer Games Conference (Athens, GR, 2011). http://gameconference2011.files.wordpress.com/2010/10/thejoy1.pdf. Retrieved May 20, 2011.

[12] Montola, M. The Positive Negative Experience in Extreme Role-Playing. In Nordic DiGRA Conference (Stockholm, SE, 2010). http://www.digra.org/dl/db/10343.56524.pdf. Retrieved May 20, 2011.

[13] Sicart, M. The Ethics of Computer Games. MIT Press, Cambridge, 2009.

[14] Freire, P. Pedagogy of the Oppressed. 1970.

[15] Boal, A. Theater of the Oppressed. 1993.

[16] Jenkins, H. Game Design as Narrative Architecture. In Wardrip-Fruin N., and Harrigan P. eds., First Person: New Media as Story, Performance and Game, MIT Press, Cambridge, 2004, 118–130.

[17] Vesavuori, M. Cutting the Master’s Strings: Identity and Drama in the Simulated Spaces of Games. http://www.propaganda-bureau.se/media/Mikael_Vesavuori-cutting-the-masters-strings.pdf. Retrieved May 20, 2011.

[18] Vella, D. Spatialised Memory: The Gameworld as Embedded Narrative. In The Philosophy of Computer Games Conference (Athens, GR, 2011). http://gameconference2011.files.wordpress.com/2010/10/spatialised-memory-daniel-vella.pdf. Retrieved May 20, 2011.

[19] Nintendo: Controllers at Nintendo (Wii), 2011. Retrieved May 13, 2011. Nintendo: http://www.nintendo.com/wii/console/controllers

[20] Sony: PlayStation Move Motion Controller. Retrieved May 20, 2011. Sony: http://us.playstation.com/ps3/playstation-move/

[21] Microsoft: Kinect för Xbox 360. Retrieved May 13, 2011. Microsoft: http://www.xbox.com:80/sv-SE/kinect

[22] Arduino: Arduino. Retrieved May 13, 2011. Arduino: http://www.arduino.cc/

[23] Andrew O’Malley: DOTKLOK by Andrew O’Malley. Retrieved May 13, 2011. Andrew O’Malley: http://www.technoetc.net/dotklok/

[24] Sterling, B. Shaping Things. MIT Press, Cambridge, 2005.

[25] Weiser, M., and Seely Brown, J. The Coming Age of Calm Technology. 1996.

[26] BlenderNation: Miniature Architectural Projection Mapping. Retrieved May 18, 2011. BlenderNation: http://www.blendernation.com/2011/05/18/miniature-architectural-projection-mapping/

[27] VPROInternational: Living in the End Times According to Slavoj Zizek. Retrieved May 18, 2011. VPROInternational: http://www.youtube.com/watch?v=Gw8LPn4irao

[28] Wikipedia: Normal mapping, 2011. Retrieved May 25, 2011. Wikipedia: http://en.wikipedia.org/wiki/Normal_mapping

[29] Green, C. Efficient Self-Shadowed Radiosity Normal Mapping. In SIGGRAPH (San Diego, USA, 2007). http://www.valvesoftware.com/publications/2007/SIGGRAPH2007_EfficientSelfShadowedRadiosityNormalMapping.pdf. Retrieved May 25, 2011.

[30] Macstories: iPad 2 Head Tracking + Glasses-free 3D App Now Available, 2011. Retrieved May 25, 2011. Macstories: http://www.macstories.net/news/ipad-2-head-tracking-glasses-free-3d-app-now-available/

[31] Kimchi and Chips’ blog: Kinect + Projector experiments. Retrieved May 13, 2011. Kimchi and Chips: http://www.kimchiandchips.com/blog/?p=544

[32] Microsoft: Kodu, Microsoft Research. Retrieved May 20, 2011. Microsoft Research: http://research.microsoft.com/en-us/projects/kodu/

[33] Carnegie Mellon University: Alice.org. Retrieved May 20, 2011. Carnegie Mellon University: http://www.alice.org/

[34] MIT Media Lab: Scratch | Home | imagine, program, share. Retrieved May 20, 2011. MIT Media Lab: http://scratch.mit.edu/

[35] Ishii, H., and Ullmer, B. Tangible Bits: Towards Seamless Interfaces between People, Bits and Atoms. In Proceedings of CHI ’97.

[36] Janlert, L-E. The Evasive Interface: The Changing Concept of Interface and the Varying Role of Symbols in Human-Computer Interaction. In Jacko, J. ed. Human-Computer Interaction, 2007, 117–126.

[37] Boda Borg: Boda Borg — Roliga skolresor, Kick Off, Äventyr & Coaching Teamutveckling. Retrieved May 13, 2011. Boda Borg: http://bodaborg.se/

[38] Fagerholt, E., and Lorentzon, M. Beyond the HUD: User Interfaces for Increased Player Immersion in FPS Games. Chalmers University of Technology, 2009, MA Thesis.

[39] WRAUGHK Audio Design: Deep Sea. Retrieved May 20, 2011. WRAUGHK Audio Design: http://www.wraughk.com/show.php?title=DeepSea

[40] Klevjer, R. Telepresence, cinema, role-playing. The structure of player identity in 3D action-adventure games. In The Philosophy of Computer Games Conference (Athens, GR, 2011). http://gameconference2011.files.wordpress.com/2010/10/runeklevjerathensfinalpdf.pdf. Retrieved May 20, 2011.