Election time: Do Swedish political parties’ websites keep their User Experience promise?

Read on to see how the parties fail to deliver a good technical user experience to their visitors, with alarming shortcomings in performance (which increases data costs), security and accessibility.

Sweden is holding its next general election on September 9th. It is expected to be a drama of big proportions, as the political field has been moving both more quickly and more unexpectedly than many were prepared for since the last time people went to vote in 2014. The greater political climate is also affected by the presidency of Donald Trump, the re-activation of the climate change issue (where Sweden made international headlines because of rampant wildfires in July), and an increased urgency around election hacking or the threat of it. Hacking, as well as deliberate misinformation and “shaming”, has been making headlines in recent days as the Social Democrats got hacked twice in a very short timespan.

Word that the website had been hacked reached Socialdemokraterna at around 11 on Monday morning, and the site has been down since then…www.expressen.se

We might say and think whatever we want about corporations and start-ups and whatnot doing “the right thing” by providing snappy and responsive services to customers. But things quickly turn into a question of social justice and transparency when and if a political party cannot represent itself in a decent manner because of someone tampering with the site, by making it hard or impossible to view the (correct) content.

The “right thing” is to be fast, accessible and secure. Even more so if you run the website of a political party in a democracy where almost everyone is online.

With this backdrop I decided to do some auditing of all the “big 9” government parties and their websites. Tools used are Google Chrome, Lighthouse and Mozilla Observatory. My assumption has been that the parties want to have modern mobile-responsive, quick, accessible sites. Let’s see how that assumption holds up, shall we?

The parties examined

- Vänsterpartiet

- Feministiskt initiativ

- Miljöpartiet

- Socialdemokraterna

- Centerpartiet

- Liberalerna

- Kristdemokraterna

- Moderaterna

- Sverigedemokraterna

Factors examined

- Performance

- Accessibility

- SEO

- Security

- Images

Want to see the data?

Data is available at https://docs.google.com/spreadsheets/d/181NMOZKU6tL6TQN1-MGC7xRZr5BxBGclgEsLNg5D2wE and a ZIP file (20.1MB) containing screenshots and Lighthouse reports can be found at https://drive.google.com/file/d/1vtLJDusWOIwuPwGedWD3zPZVBGmtpS2V/view?usp=sharing.

Notes on testing

Lighthouse testing was done on a full-spectrum audit (all 5 audit types) on an “Applied Fast 3G” throttling setting, which emulates a Nexus 5X mobile phone. The browser I tested with was Chrome 68 with the built-in Lighthouse 3, using a dedicated no-extension profile that should have zero impact on network metrics. Chrome network metrics, in turn, also used the “Fast 3G” throttling profile.

I’ve run tests several times during the week of August 20th to 24th, and page sizes and some metrics have fluctuated a bit. This is to be expected.

Let’s get it on!

Analysis

What systems do the parties use?

The content management systems the parties use seem to be Episerver, Sitevision and, most commonly, WordPress. We are still talking old-fashioned CMSes, no headless stuff.

Performance

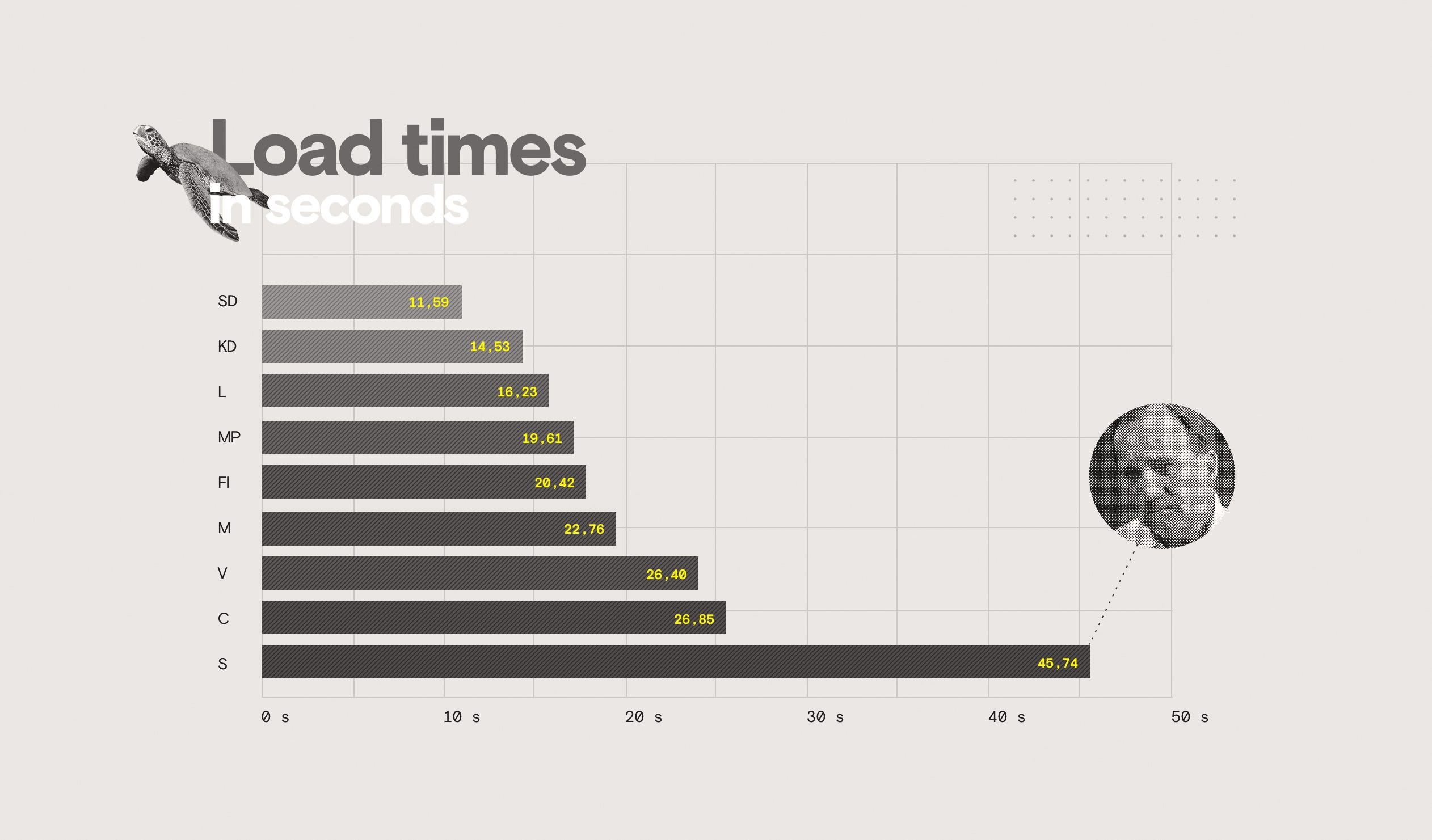

Slow sites are plentiful. Not only does a slow site more often than not get closed by an impatient visitor, it is also not very courteous to the visitors who stay.

Hoarding bytes means that your user, and potential voter, will (quite frankly) have to pay more money than necessary just to view your content. Not very nice, is it? The bigger your transfer size, the bigger the toll on the user’s wallet and patience. In the corporate sector, transfer size and content-to-glass speed are directly connected to revenue and to making people care about what you do. This does not change in politics.

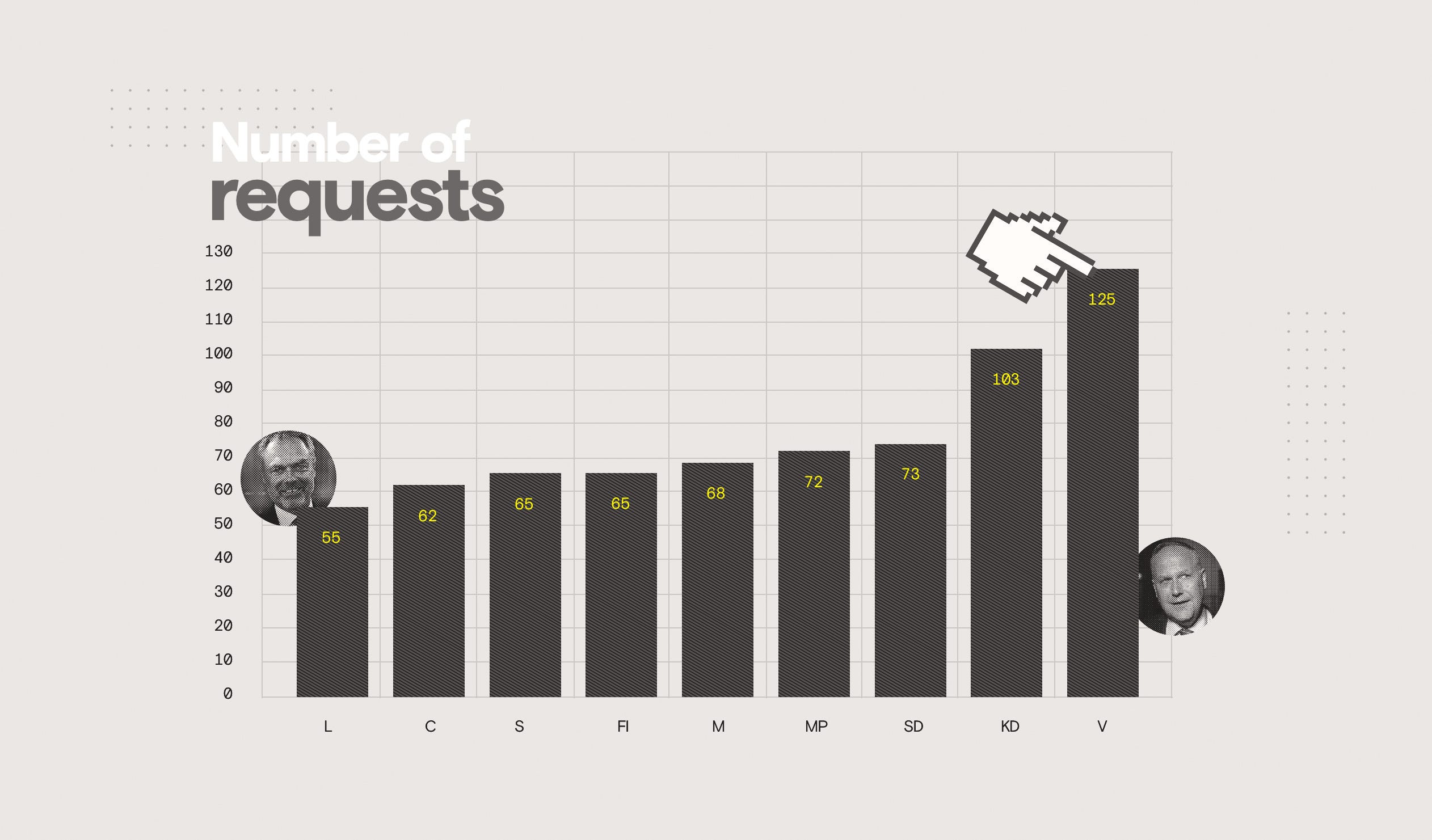

There’s a wide degree of variability in how well caching and other performance-affecting aspects are implemented. Pingdom Tools, which I also took out for a quick run, did give pretty good scores on many items. Lighthouse, though, is harder to please. One key factor behind decreased scores in these two tools is the use of scripts hosted outside the primary domain. The site owner cannot influence how those scripts are served, so the only reasonable way to improve this is to self-host scripts and make sure that one’s own server is going full blast on all the performance bits.

Repeat visits were browser-cached but still hampered by, often, very large request counts. On a fiber connection the repeat load time could be almost the same as a first visit because of external requests and –what I believe is–browser congestion. Too much going on, in short.

On a lesser browser I don’t know how well the caching would fare, especially since the scoring was sometimes poor on this aspect. The best sites in this regard (Socialdemokraterna and Sverigedemokraterna) were still around 30 KB. Not much by itself, but a Service Worker-cached version should be able to hold it down in sub-1 KB territory and immediately improve reload speed, since you would not need to request the resources again at all. Note that none of the sites use Service Workers to do such modern client-side caching.

Winner(s): Miljöpartiet and Liberalerna are the only ones with a 70+ score. Honorable mentions to Vänsterpartiet and Feministiskt initiativ who almost made the cut.

Accessibility

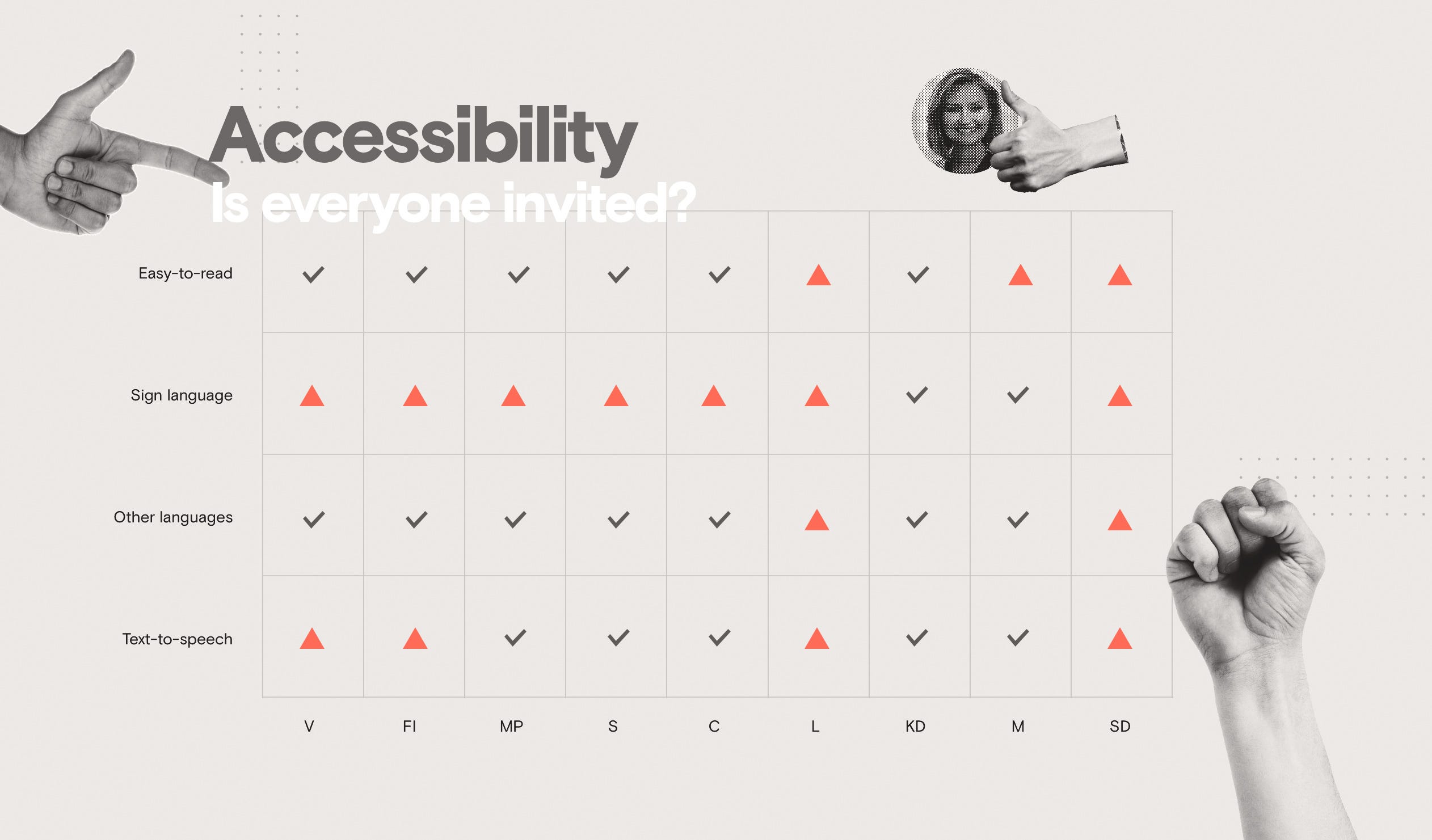

Accessibility is very often neglected. This is weird since there is such a great degree of variance in ability among people, and since the sheer number of people who would benefit from even simple improvements is so vast. Luckily, there is a lot of good to be said here, at least in attempts to address this issue.

Note! Lighthouse’s automated accessibility ratings are different from any qualitative, manual evaluation, which may also have to take content quality into account. What I’m reporting is thus only the metric score and the existence (or not) of certain accessibility functionalities.

Moderaterna and Kristdemokraterna have what I believe are the only sign-language specific versions of some of their content. It’s also common to have “lättläst” (easy-to-read) versions: only Moderaterna and Sverigedemokraterna didn’t have this, from what I could find. The possibility to listen (via various “text-to-speech” plugins) was found on 5 out of 9 sites. I can’t say I have much experience with text-to-speech, but it was clear that these tools could themselves use some UX work. The implementations of these interfaces were at best “so-so”, and in the case of Kristdemokraterna the site even came to a grinding 403 halt when I tried using it.

Shame points go to SD and Liberalerna, who are exceptional in that they provide no accessibility functionality whatsoever. Not OK!

Accessibility is an area that’s very easy to scan automatically for markup and structural issues. Even if some accessibility improvements may touch on the eccentric and obscure, taking home a score of 80+ shouldn’t be a problem for a half-decent developer.

Winner(s): Centerpartiet and Sverigedemokraterna get 70 and 71 points. Ironically, SD get the highest score, yet they add no additional (meaningful) functionality to their site. This only further solidifies the point that this particular metric is hard to score accurately with an automated process that’s purely about structure.

SEO

Almost perfect across the board, at least from the Lighthouse scores.

My cynical side tells me SEO was more important than accessibility. Both areas are about as easy to improve. Do it.

Anyway, good job, mostly.

Winner(s): Everyone gets a 90+ score, except Moderaterna who still manages a respectable 82 points. Obtaining a perfect 100 shouldn’t be too hard for anyone as extracurricular activity after the “big, real” other issues.

Security

All sites get F on Mozilla Observatory except Feministiskt initiativ and Moderaterna, bringing home a D and D+. Thankfully, all seem to use HTTPS domains, so at least that’s a good thing.

A big reason for the prevalence of F’s in this department is poor headers, among them Content Security Policy (CSP), a fairly new way of specifying allowed content on a domain. Among the results, however, some things were worse than others. Redirecting to one’s HTTPS domain is a minimum, which many don’t do. Many also accept external domain content served as insecure HTTP traffic, which results in a whopping total of -50 points in Mozilla Observatory. The headers also help minimize the risk of various man-in-the-middle attacks and cross-site scripting hacks. Good headers plug holes and also make it harder to “legitimately” use third-party scripts. A (mostly good) side effect of this is that you will need to start having more resources (scripts etc.) on your own domain.
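To make the point about insecure external content concrete, here is a minimal Python sketch that scans a page’s markup for subresources requested over plain HTTP. The sample markup and the example.* hostnames are made up for illustration, and the tag list is a simplification (a real audit tool such as Mozilla Observatory checks far more than this).

```python
from html.parser import HTMLParser


class InsecureResourceFinder(HTMLParser):
    """Collects URLs of subresources that are requested over plain HTTP."""

    # Tags whose attribute triggers a request on page load (simplified list).
    RESOURCE_ATTRS = {
        "script": "src",
        "img": "src",
        "iframe": "src",
        "link": "href",
    }

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        wanted = self.RESOURCE_ATTRS.get(tag)
        if wanted is None:
            return  # e.g. ordinary <a> links are not subresources
        for name, value in attrs:
            if name == wanted and value and value.lower().startswith("http://"):
                self.insecure.append(value)


def find_insecure_resources(html):
    """Return every subresource URL in the markup that uses http://."""
    finder = InsecureResourceFinder()
    finder.feed(html)
    return finder.insecure


# Hypothetical markup, not taken from any of the audited sites.
SAMPLE_HTML = """
<html><head>
<link rel="stylesheet" href="https://example.org/site.css">
<script src="http://cdn.example.net/widget.js"></script>
</head><body>
<a href="http://example.com/">an ordinary link</a>
<img src="http://images.example.net/banner.jpg">
</body></html>
"""

print(find_insecure_resources(SAMPLE_HTML))
```

Anything this function returns is content that a visitor on HTTPS would either have blocked or receive without protection against tampering in transit.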

This piece of the puzzle is (with the exception of CSP, which may require a bit more head-scratching to implement without breaking your site) a simple matter of adding common, minimally-tolerant headers to the server. In 5 minutes of work a site should be able to lift off from a miserable F to a solid B rating. For some help with generating a CSP, there’s this handy generator.
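As a rough illustration of what “common headers” means here, the Python sketch below lists a handful of widely used security headers and reports which ones a response lacks. The header values are typical starting points that I am assuming for the example, not settings taken from any of the audited sites, and the CSP shown is the strictest possible placeholder that most real sites would need to loosen.

```python
# Baseline security headers of the kind that header scanners look for.
# Values are assumed, commonly seen starting points; tune them per site.
BASELINE_HEADERS = {
    "Strict-Transport-Security": "max-age=63072000; includeSubDomains",
    "X-Content-Type-Options": "nosniff",
    "X-Frame-Options": "DENY",
    "Referrer-Policy": "strict-origin-when-cross-origin",
    "Content-Security-Policy": "default-src 'self'",
}


def missing_headers(response_headers):
    """Return baseline header names absent from a response.

    Header names are compared case-insensitively, as HTTP requires.
    """
    present = {name.lower() for name in response_headers}
    return [name for name in BASELINE_HEADERS if name.lower() not in present]


# Hypothetical response that only sets HSTS.
example_response = {
    "content-type": "text/html; charset=utf-8",
    "strict-transport-security": "max-age=300",
}
print(missing_headers(example_response))
```

The function only checks for presence; judging whether a value is strong enough (a long HSTS max-age, a CSP without unsafe-inline) is where the scanners earn their keep.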

Winner(s): No one. They are all garbage. Winner of the “Least bad” award goes to Moderaterna and Feministiskt initiativ.

Images

The biggest part of a site’s payload is almost always its images. An image can easily consume megabytes of data on its own. An upside is that photographic images add very little computational complexity, so they won’t directly affect render time or battery. However, their size will be a bandwidth issue which will be a bottleneck in adverse conditions, like a mobile user in the outback. It’s also the area where the biggest savings can be made. Expect perhaps 70% of your payload to come from images. Going through with a conservative shaving of 50% (yes, that’s conservative) from total image size would therefore by itself remove roughly a third of all bytes transferred (0.7 × 0.5 = 35%).
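The arithmetic behind that estimate is simple enough to sketch in a few lines of Python. The function name is mine; the 70% and 50% figures are the rough assumptions from the paragraph above, not measurements.

```python
def payload_saving(image_share, image_reduction):
    """Fraction of total page weight removed when only the images shrink.

    image_share: fraction of the page weight that is images (0..1)
    image_reduction: fraction shaved off the images themselves (0..1)
    """
    return image_share * image_reduction


saving = payload_saving(image_share=0.70, image_reduction=0.50)
print(f"Total bytes saved: {saving:.0%}")
```

Plugging in a site’s real image share from the Network panel gives a quick sense of whether image work or script work deserves attention first.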

Let me give a concrete example of how to improve UX by handling images correctly. The original size of the three images used on the Liberalerna site is 629 KB (combined). After one pass through ImageOptim they came down to 166 KB, or 26% of their original size, without any severe visual degradation. So even if the Liberalerna site is tiny and rather speedy, the images there are several times bigger than they should be: the site could go from mostly right to being totally ace at 282 KB total. Remember, there is no “minimum size” for a website. If you can do what you need in 282 KB, there is absolutely no need to believe the ~750 KB current size is better. Shave! And never look back. The shaved stuff is trash, not gold.

No one except Socialdemokraterna (read below) seems to use a modern image service like Cloudinary, which makes it possible to request a specific size rendition of a given image together with a specific compression quality. This is absolutely brilliant, as it removes the hassle of exporting images in various sizes, as content editors have been forced to do for a long time. With that in place, images can be requested with relevant size and quality parameters directly from code. Doing it from code also makes it possible to fetch just the right size of image in real time, rather than providing one image which is either way too big or takes the middle road of being at best acceptable on most screens.

Another example: Socialdemokraterna has about 6.6 MB of a 7.2 MB total payload dedicated to images (4.8 MB out of 5.3 MB in a more recent trial; I don’t know exactly why the difference occurred). They do use parameterized “smart” URLs, for example: jobb_640px.jpg?width=688&quality=60. That last bit with “?width…” is what tells their system the size and quality of the requested image. However, a bunch of rather big images retrieved from an external source (Flickr, it seems) are oversized and undercompressed, adding considerably to the page download size. Even more troubling is that the smart URL querying is not complemented by fully functional responsive image sizes, from what I can detect. Regardless of whether I load the site with a tiny viewport or a full-screen view, I get the same 4.8 MB of images. Caveat: this may be a system thing, since the markup tags do look proper at a quick glance, but the Network panel is just not responding as expected. A mobile user should get significantly less image data because of the smaller screen.
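To show how parameterized URLs and responsive images are meant to work together, here is a small Python sketch that builds such URLs and combines them into a srcset value. The width and quality parameter names follow the example URL above; the host, the helper names and the list of widths are assumptions of mine, and other image services use different parameter schemes.

```python
from urllib.parse import urlencode


def image_url(base_url, width, quality=60):
    """Build a URL asking the image service for one width and quality."""
    return f"{base_url}?{urlencode({'width': width, 'quality': quality})}"


def srcset(base_url, widths, quality=60):
    """Build an HTML srcset value listing one rendition per width.

    The browser then picks the smallest rendition that fits the viewport.
    Escape '&' as '&amp;' when writing the value into HTML markup.
    """
    return ", ".join(f"{image_url(base_url, w, quality)} {w}w" for w in widths)


# Hypothetical host; only the file name pattern comes from the article.
BASE = "https://example.org/images/jobb.jpg"
print(image_url(BASE, 688))
print(srcset(BASE, [320, 688, 1280]))
```

With a srcset like this in place, a phone with a narrow viewport should request the 320-pixel rendition rather than the same bytes as a desktop, which is exactly what did not seem to happen in the test above.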

Lazy-loading off-screen images should also be an absolute minimum these days, but I haven’t seen this on any site. Don’t show images that are off-screen, easy as that. Implementation is a snap with any of the available (light-weight) libraries out there.

Winner(s): Socialdemokraterna, who should still look into why they grab external images and why the responsive images seem not to work. For the others, the user is still provided “dumb” images which are often way too big, with little or no compression. Not OK.

Suggestions

What is the “sweet spot” to aim for?

For performance, some reasonable pointers are:

- The fastest bytes to deliver are the ones that never need to be delivered: remove unused parts (and dev-specific code). The rest should be heavily minified and delivered over HTTP/2 using gzip or brotli/zopfli compression. Page weight is directly related to the user’s data plan cost, and you are therefore responsible for doing your part in keeping costs down.

- If something is loaded once, it should never be a “tax to be paid” again. Cache everything — reloads and revisits should be immediate and carry as close to a zero-byte cost as possible.

- If the user doesn’t see it, they don’t want it (nor need it) loaded. Use patterns like route splitting and lazy-loading to avoid loading unnecessary bytes.
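For a feel of what the compression mentioned in the first pointer buys, this Python sketch measures how much gzip shrinks a chunk of markup. The sample markup is invented and deliberately repetitive, so it compresses far better than a real page would; treat the number as an upper-end illustration rather than a benchmark.

```python
import gzip


def gzip_saving(payload: bytes) -> float:
    """Return the fraction of bytes saved by gzip-compressing the payload."""
    compressed = gzip.compress(payload)
    return 1 - len(compressed) / len(payload)


# Invented, highly repetitive markup (real pages are less uniform).
SAMPLE_MARKUP = (
    b'<li class="party-list__item"><a href="/politik">Politik</a></li>\n' * 200
)

print(f"{len(SAMPLE_MARKUP)} bytes before compression")
print(f"gzip saves {gzip_saving(SAMPLE_MARKUP):.0%} of them")
```

In practice the web server or CDN applies the compression, so this is usually a configuration switch rather than application code.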

I’ve written on the issue of performance in greater detail earlier this year. Consult the article if you want to get knee-deep into the nitty-gritty!

User Experience and tech meet in many areas in any given digital project. One of the most important cross-over areas is…blog.prototypr.io

Similarly, for security, I would count the below as a good baseline:

- Distrust -everything- both internal and external to your domain and only make exceptions for the very exact things that should be allowed (a so-called “whitelist policy”).

- Security is not just for you, it’s for the user too. Misusing this trust is therefore a violation you inflict on your user as well.

- Reduce the number of moving and exposed parts. Sensitive information behind logins, such as FTP, and well-known targets like WordPress are extremely vulnerable and should be replaced with other alternatives. Private or small-scale servers are also ripe for attacks and suffer from not having big backbones that can defend them.

What specifically could the parties do?

Below I will list some of the grander, more over-arching things that would make an immediate practical difference.

- Use static hosting to minimize your attack surface. Services include Netlify, Firebase Hosting, Amazon S3, or Zeit Now. With these you can do atomic rollbacks so if you are ever hacked, you can just go back to your last set of files. A static host entirely sidesteps the problem of someone destroying or tampering with your files.

- Run frequent tests, especially during development, to audit performance, security and accessibility. Running them often makes problems visible quicker and helps resolve issues faster. Good tools include Lighthouse, Mozilla Observatory, Security Headers, GT Metrix, Sitespeed.io, and pa11y.

- Consult the Infosec subsite at Mozilla which has details on both technical and human-to-human aspects of security.

- Use a serverless backend like AWS Lambda functions or Google Cloud Functions to make it harder to attack key functionality and harder to pinpoint where the server is and what it does. You also get the upside of not having to handle your own server security.

- Use a headless CMS (such as Contentful, Prismic, or Sanity) together with automated content backup in order to let the service provider cover security concerns.

- Use Cloudflare or another service that does good DDoS deflection.

- Use a Content Delivery Network (CDN) to deliver content faster, and consider sharding out to CDNs that may pose less risk of attack. CDNs are often provided as part of a static hosting service.

- Conduct normal production code hygiene: minify and bundle (consider Webpack) all code to make page loads much faster; strip all console logging (etc.) from your public code.

- Use Snyk or some other vulnerability detector to find dependencies that may pose security risks.

- Stop using harmful third-party code for things like undue tracking, analytics, and beacons. At the very least, minimize it and make sure it’s GDPR compliant so that zero tracking happens without explicit user consent. “Vanity packages” with unnecessarily heavy features (common with calendars, carousels, and the like) should be removed and replaced with modern, small alternatives; then bundle and serve the code from your own domain. If you DO use external scripts, for example from a CDN, give each of them a Subresource Integrity hash.

- Use a rigid Content Security Policy and set long-expiry caching headers. Take a look at Hisec for a starter that provides an A+ level boilerplate.

- Use a modern image service like Cloudinary, or a CMS like Contentful that provides parameterized images (so you can fetch compressed, correct-sized images in your code with no work from a Content Editor).

- Avoid jQuery, which is bloated and a major security risk. Use a fast, modern rendering library like React, Vue or Angular 5 instead. If you’re not using a rendering library, switch jQuery for plain vanilla JavaScript.

- Don’t keep data, if at all possible. Avoid FTP and any other file management systems that are basically open holes into your private files. Consider sharing files via cloud services with serious credential management, or send encrypted short-lived links for regular file transactions with WeTransfer.

- Use service workers together with pre-caching to dramatically improve reload speed.
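The Subresource Integrity hash mentioned in the list above is just a base64-encoded digest of the exact file you expect to receive. Below is a minimal Python sketch of how such a value can be computed; the helper names and the CDN URL are hypothetical, and in practice the hash is usually generated by a build tool or copied from the CDN’s own listing.

```python
import base64
import hashlib


def sri_hash(content: bytes, algorithm: str = "sha384") -> str:
    """Return an integrity value of the form '<algorithm>-<base64 digest>'."""
    digest = hashlib.new(algorithm, content).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def script_tag(url: str, content: bytes) -> str:
    """Render a script tag that the browser will verify before executing."""
    return (
        f'<script src="{url}" integrity="{sri_hash(content)}" '
        f'crossorigin="anonymous"></script>'
    )


# Hypothetical third-party script body and URL.
library_source = b"console.log('carousel loaded');"
print(script_tag("https://cdn.example.net/carousel.min.js", library_source))
```

If the file on the CDN is later altered, even by a single byte, the digest no longer matches and the browser refuses to run it, which turns a silent compromise into a visible breakage.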

Political parties are vital components in a representative democracy, as they are the key symbolic instances that represent the people and their opinions. By adhering to modern, and strict, guidelines for technical UX, parties can better withstand assaults from criminal or anti-democratic forces (not to speak of trolls) and continue to deliver correct information.

With that said, here’s hoping that we get less hacking and fewer bad party websites!

Many thanks to Johan Gunnarsson, a designer at Humblebee, for the illustrations to this article.

Mikael Vesavuori is a Technical Designer at Humblebee, a digital product and service studio based in Gothenburg, Sweden. Humblebee has worked with clients such as Volvo (Cars, Trucks, Construction Equipment), Hultafors Group, SKF, Mölnlycke Health Care, and Stena. Our design sprint-based approach and cutting-edge technical platform lets us build what’s needed.