Who Benefits From AI in Software Organizations?

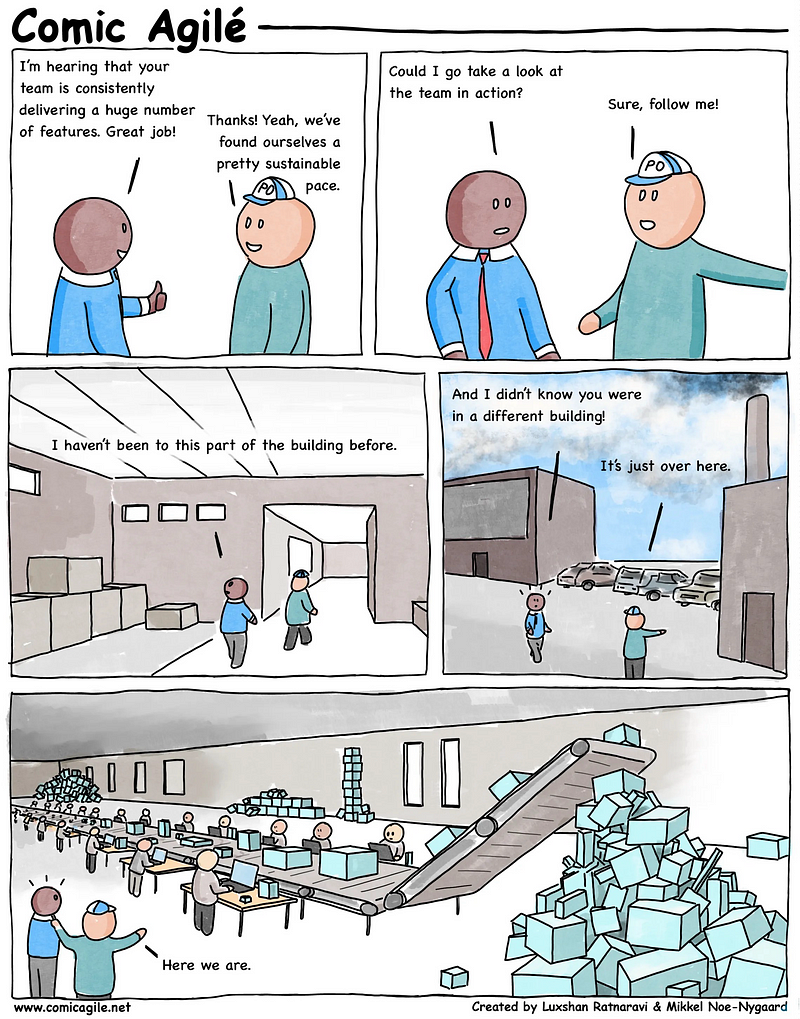

There is a very real danger that your AI-boosted increase in speed will only mean faster churn in the feature factory.

This is the tale of two schools of thought on introducing generative AI into software-producing organizations, essentially as a workforce replacement or as a delivery enhancer, along with my thoughts on each of those directions.

I’ll put it all together at the end and we’ll all be merry and have learned something.

“Last to the bottom is a broken and ravaged company! Oh, right, that’s all of us…”

The first school of management thinkers will reason: Hey, with AI, I can now get by with X times (or X percent) fewer staff and produce the same amount of output. As if it were an assembly line, because that’s how software is made…right?

True, one of the immediate effects of AI is that it makes code (as a whole) easier and faster to produce. In a culture driven by output over outcome, quantity is king. The question is, of course: Is that really what we want? No. It’s not. And it’s hardly controversial to say it. But if you, like me, sometimes feel trapped by business being driven by busyness anyway, then of course something that makes you output more is like a boon from the heavens. Suddenly everyone is a glorious 10x engineer!

I’ve already written several times about organizations that rely heavily on their IT capabilities and staff, yet don’t really understand or leverage them. They undermine their own future with poor decisions and strategies that make generating value through IT practically impossible. It’s a truly unloving relationship, in which the mouth happily bites the hand that feeds it and then derides the hand for being there.

For developers, one clear positive effect, which various reports indicate AI can support, is improved developer experience. I’m definitely friends with the developer experience concept and champion it as much as I can, but what is at the heart of these positive feelings?

From my own experience, and from talking to others doing AI-assisted coding (damn near everyone does it now), the greatest benefits tend to show up in the boring, ever-present drudgery of coding. Think of generating bits of code with low overall intellectual value, such as boilerplate, or scaffolding things like bits of explanatory text. Basic automation can do these things, sure, but then someone needs to understand the situation, think up the process, code it, test it, and so on. Generative AI, like a person, can quickly fill in the blanks where general-purpose programming cannot. In short, generative AI is best at taking care of the things that are too expensive to spend a human brain on.

It’s still a far cry from the Terminator or superintelligence.

I don’t share the fears behind the nagging, incessant doomerism around the evolution of artificial intelligence. What I fear is more akin to an unseen, creeping dread, similar to what happened to the agile movement:

The high-pressure feature factory, with an AI-provided Midas touch accelerating it to new, unknown, and unprecedented heights (and misery).

If your current place of work emphasizes output over outcomes, then yes, I believe you should be afraid of being replaced.

The same goes if the communicative and intellectual complexity of what the humans do is relatively low, or if the problems they deal with are already well understood. Then they’re probably smoked in the next few years, too.

The irony, of course, is that, as a commenter on one of my LinkedIn posts noted, the market wanted more code, and now it has it. An infinite amount of code, essentially for free. Hooray, rejoice!

Lose-lose proposition

One of the big problems with software engineering as a pseudo-discipline is that there are too few competent people to meet the current demand for their skills, and too little meaningful incentive for many developers and code monkeys to upgrade themselves into well-rounded software engineers, in the proper sense of the term. If their organizations aren’t even asking them to, why would they?

Right now, it’s a “developer’s market”, meaning professionals have little real reason to improve their skills to stay ahead of the curve: People can earn good money with quite mediocre skills.

Call me cynical, but I think that, for the next few years, a lot of people will simply equate generative AI with “more money in the bank for doing less work” and happily clock a few more hours watching anime on Netflix each afternoon. Companies, drawing the very same conclusions, will equate generative AI with higher output (“pseudo-productivity”) and employ or hire fewer developers as a result. Status quo retained after a short-lived tussle.

So, about that volume/quantity thing…

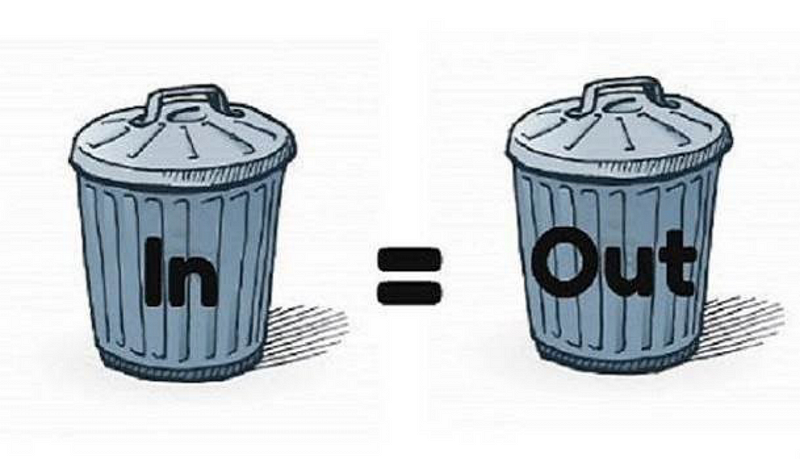

Good engineering practices have taught us the hard way that:

- Fact #1: Better engineers aren’t better because they type faster.

- Fact #2: Better code isn’t better because there is more code.

Which leads to further insights:

- Fact #3: Code is a liability and you want as little as possible of it.

- Fact #4: The more code you have, the better baseline processes and automation you want around it (security, legal, delivery…).

The less mature and agile an organization is, the harder these last facts will knee-cap it as its software real estate spreads like Japanese knotweed. Without good practices in place, such organizations have had no real way to learn these lessons, so under those conditions people will be very interested in getting a code-churning machine that works for peanuts.

Sadly, just like in your favorite post-apocalypse fiction, everyone loses at the end of this story.

Accelerating good practices and making better software

The second kind of management thinker will go: OK, this means I should raise the overall quality (as needed and appropriate) with the extra productivity we gain.

That’s a better, but not flawless, direction.

Of course, not all organizations are as dysfunctional as the ones we just looked at. Nor is every developer’s or software engineer’s highest dream to churn out yet another boilerplate WordPress site; many see beyond that and understand that the profession requires a broader skill set.

What I hope the GenAI revolution will bring is better conditions for turning developers into engineers and, consequently, for raising software quality a step (or two, or ten).

Organizations are their people. As the ancient Chinese proverb goes…

So:

- Assuming we are getting the right people;

- that we are giving them ample opportunity for learning and training;

- and we truly want to give them AI assistance to afford more time for qualitative work,

then, at the very least, we need to know how we want to use AI in our organization and enable people to use the technology. Even at its best, generative AI, fantastic as it is in many ways, will likely still require supervision and inspection, so it would be wise to give people those skills too.

At this point, there is still a clear balance between the human and AI.

However, let’s look into the crystal ball for a bit and imagine a quite plausible near-future scenario in which generative AI has a significant skills advantage over our human developers.

When the skills gap grows so large that it completely overshadows the human in this relationship, we may have to start building processes and mechanisms that verify the assertions of LLM-produced code, because the human can no longer do so, at least not without a major investment of time and effort.
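What might such a verification mechanism look like in practice? One existing technique that fits the idea is property-based checking: instead of a human reading every generated line, you state properties the code must satisfy and let a machine hammer it with random inputs. The sketch below is only an illustration under assumptions: `llm_generated_sort` is a hypothetical stand-in for code an LLM produced, and `find_property_violations` is a hypothetical helper, not a real tool.

```python
import random
from collections import Counter
from typing import Callable, List


def llm_generated_sort(xs: List[int]) -> List[int]:
    """Hypothetical stand-in for LLM-produced code (here: an insertion sort)."""
    out: List[int] = []
    for x in xs:
        i = 0
        # Find the first position whose element is greater than x.
        while i < len(out) and out[i] <= x:
            i += 1
        out.insert(i, x)
    return out


def find_property_violations(
    sort_fn: Callable[[List[int]], List[int]],
    trials: int = 500,
    seed: int = 0,
) -> List[List[int]]:
    """Run sort_fn on random inputs and return every input that breaks the spec.

    The spec checked here: the output is in non-decreasing order and is a
    permutation of the input (same elements, same multiplicities).
    """
    rng = random.Random(seed)  # seeded, so any failures are reproducible
    violations: List[List[int]] = []
    for _ in range(trials):
        xs = [rng.randint(-50, 50) for _ in range(rng.randint(0, 20))]
        ys = sort_fn(list(xs))  # pass a copy so in-place mutation can't hide bugs
        ordered = all(a <= b for a, b in zip(ys, ys[1:]))
        same_elements = Counter(ys) == Counter(xs)
        if not (ordered and same_elements):
            violations.append(xs)
    return violations
```

The human's job shifts from inspecting the implementation to stating the properties: `find_property_violations(llm_generated_sort)` should come back empty, while a plausible-looking but wrong candidate such as `lambda xs: sorted(set(xs))`, which silently drops duplicates, gets caught.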

Normally, when a skills gap like that appears in other settings, some form of skill sharing or competency transfer follows. Once generative AI grows better than the human staff, one option would be to let it act as a teacher (let’s set aside the question of whether it is correct for now) and start improving the rest of the group or organization. It would move beyond merely performing the labor to actually supporting the creation of new skills.

What would that look like when a machine does the teaching? What would the training mechanisms look like when the machine trains the human?

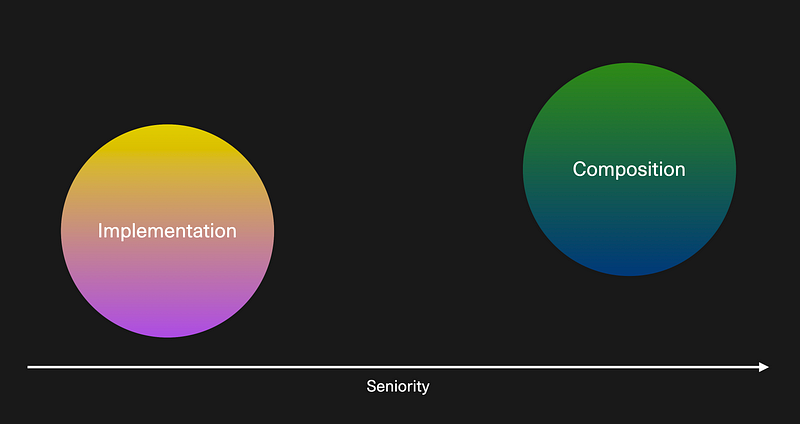

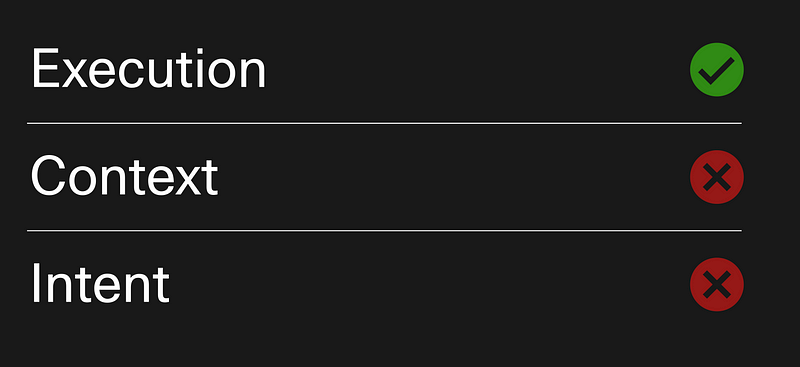

If AI is to enhance the capabilities of any given developer, it has to do so in a way that lets the developer spend more time where they are currently weak: Problem decomposition, solutioning, practical architecture questions, communication, diagramming, software design… and all the rest that, together, make up the well-rounded “engineer” as opposed to the plain white-sugar “developer”.

Some examples from software engineering follow.

Seniority means going up in abstraction level, taking in concerns like composition. Bad design should be a sin, but it’s hardly uncommon. Being truly good at this job means designing well.

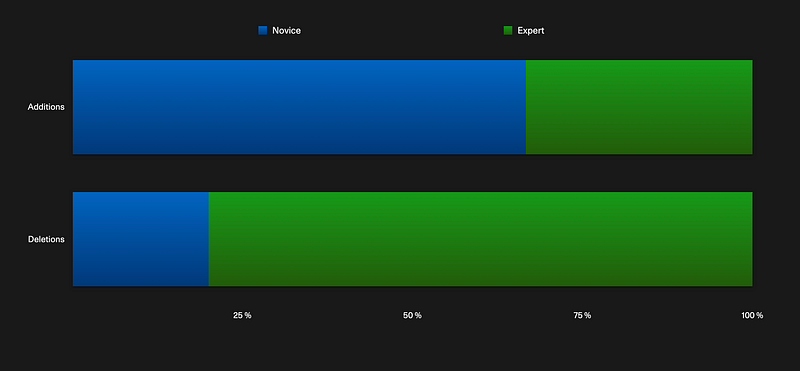

Experts often delete more than they add, and they do refactoring as a natural part of their work. Making the codebase and surrounding artifacts more intentional, expressive, and truer to the needs is central to seniority in competent software engineering.

As of 2023, AI does not easily cover senior-level concerns, at least not out of the box. With prompt engineering we can certainly support some of that, but it’s also partly a problem of limited tools and context windows: they just don’t yet support good interaction modes for vast information spaces.

But what if AI tools could soon look at the big picture as well, rather than at scattered blocks of code with no clue of how they relate?

What’s interesting about some of the movements in tooling, like generating code from user stories in GitHub, is that they automate labor rather than improve or enhance the people doing that labor today. The Marxist critique here would be that the ultimate beneficiary of workplace improvements is never the laborer but the capitalist (i.e., the employer), unless we use generative AI to de facto train staff as described above.

AI intensifies the sociotechnical issues that already exist

Is your organization struggling today?

If organizations don’t change, then I think it is inevitable that AI will end up accelerating already existing organizational failures.

Many are already struggling and failing with conventional, well-known technologies. Because AI, like other technologies before it, once again disrupts hierarchies, orthodoxies, and expectations, I sincerely believe many organizations won’t survive such shake-ups. And that’s a good thing, if a very Darwinian one. Maybe I’m more pessimistic than the poor serpent in the image above: maybe there is no rebirth for some organizations?

And many companies are too inept in IT and technology to understand the sociotechnical foundations that they need to put in place for software engineering to actually take place.

This leads to a death spiral in which natural evolution and leadership aren’t pushing “top-down” change widely enough across all these regressive organizations. Just look at what’s happening around agile/agility and continuous delivery: one would be forgiven for thinking such concepts were canon by now, but, alas, they are not.

Conversely, “bottom-up” change isn’t happening either, at least not easily, since sluggishness and a lack of foundational skills mean developers struggle with, or even outright fight back against, organizational moves towards better, more professional software engineering. It’s not just management that needs to be called out for not wanting change!

To expand on that, I believe the same impulses that are driving traditional enterprises into the gutter (think of the previously mentioned misrepresentation of agile specifically, and of software development in general) will become part of the AI “journey”, which the same enterprises will violate in the same way.

About that workplace improvement…

Time to tie this together. I’ve ranted a bit about two very different models of management pushing generative AI into a software organization.

Effort where it matters

Because AI is, crassly speaking, a type of automation, organizations will need to rethink which activities are allowed to take time, which is an opportunity to put time and effort where it matters. In my experience, too much effort goes into things like guessing value, estimating, prioritizing, et cetera.

Instead, keep things simple. Start thinking and talking. For a unit of work:

- Does it provide concrete value? This needs to be clarified, evidenced, and shared (OKRs? KPIs?).

- If yes: Does it need to be done at all? Think generic vs unique, strategic value or position…

- If yes: Can it be done by a machine or low-skilled labor? Think repeatability, lack of novelty, opportunities to optimize processes…

- If no: This is the time and place to use professional labor, benefitting from any and all automation, AI, and whatever else you need to make it happen.

The bulk of the conversation should go into the above questions, balancing the properties of the first persona (quantity-oriented; optimizing) and the second persona (quality-oriented; enabling).

Ergo: AI could come in at the last two, creational “levels”. Its purpose differs completely between them, because the unit of work itself is understood to be ontologically different depending on which “level” it fits into.
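To make the four questions concrete, here is a minimal sketch of them as a triage function. It is an illustration, not a prescribed process: the names `Route`, `UnitOfWork`, and `triage` are hypothetical, and the outcomes for a "no" on the first two questions (drop it, or don't build it) are my assumption, since the list only spells out the "yes" paths.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    """Hypothetical outcome labels for the four questions."""
    DROP = "no evidenced value: don't do it"           # assumed outcome for Q1 = no
    DONT_BUILD = "not needed at all: don't build it"   # assumed outcome for Q2 = no
    AUTOMATE = "machine or low-skilled labor: AI as an optimizer"
    PROFESSIONAL = "professional labor: AI as an enabler"


@dataclass
class UnitOfWork:
    name: str
    has_evidenced_value: bool  # Q1: concrete value, clarified, evidenced, and shared?
    needs_doing: bool          # Q2: does it need to be done at all (generic vs unique)?
    routine: bool              # Q3: repeatable, not novel, a process to optimize?


def triage(work: UnitOfWork) -> Route:
    """Walk the questions in order; only the last two levels involve creating anything."""
    if not work.has_evidenced_value:
        return Route.DROP
    if not work.needs_doing:
        return Route.DONT_BUILD
    if work.routine:
        return Route.AUTOMATE
    return Route.PROFESSIONAL
```

The two creational outcomes, `AUTOMATE` and `PROFESSIONAL`, are kept as separate routes on purpose: in the first, AI optimizes labor away (the first persona); in the second, it enables people doing novel work (the second persona).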

Let’s now talk about the basic strategies available to each party.

The organization’s main plays

- Less active: Cost reduction. Decides to employ fewer developers because of productivity gains coming from generative AI. Organization wins, worker loses.

- More active: Evolve strategy. Decides to evolve its software practices, processes, and strategy, since the entirety of software delivery is being disrupted. Everyone wins, but both the organization and workers have to adapt to potentially significant changes, soaking up the change and actually doing the work (requiring time, effort, and potential conflict).

The worker’s main plays

- Less active: Status quo. Decides to “game” the benefits of generative AI as a specialized type of work automation, spending less effort for the same pay. Worker wins, organization loses.

- More active: Raise the bar. Decides to champion and double down on qualitative factors with support from the generative AI productivity boost. Everyone wins (except the CFO?), but the organization may struggle (or even stop the change) if software quality as a concept is vague, non-material, and nascent at the C-level.

My reflections here lead me to believe that, with a change as big and divisive as generative AI, no strategic play by any single side will create mutually beneficial conditions. It almost feels like the Prisoner’s Dilemma to me, in that the only way to really “win” with AI is to collaborate.


So, to finish: Why the sad face in the introduction? Because I think most organizations are primed to destroy the people who make them work.

Or maybe that’s just me?

Moral of the story:

⚠️ Don’t destroy your people—especially not by believing a word-guessing algorithm can outcompete the organization you should be trying to run.

✅ Instead, I encourage a thoughtful and strategic approach to integrating AI in software organizations to ensure mutual benefits and avoid negative consequences.

I also recommend this article by James Matson, if you are keen on this topic: